I was working on rebuilding my VMware lab environment today and, for simplicity’s sake, decided to leverage two external Platform Services Controllers (PSCs), one for each of my vCenter environments (I need two because I am setting up Site Recovery Manager), in a federated manner, akin to what was previously called “linked mode.” If you are not familiar with the PSC, which was added in vSphere 6.0, check out this KB. I went with the third deployment model illustrated in this KB. Continue reading “Pure Storage vSphere Web Client Plugin and Multiple vCenters”

FlashArray and VMware documentation update for vSphere 6

I have completed updates for two of my main VMware vSphere documents for the Pure Storage FlashArray. These include the standard best practices document and the white paper explaining VAAI in detail and how it works on the FlashArray.

The best practices document has mainly been updated with information that this blog has shown in the past couple of months. Notably:

- vSphere 6 updates, support for Web Client Plugin versions, changes in virtual disk recommendations, in-guest UNMAP support, etc

- VMFS UNMAP changes when it comes to best practice recommendations

- vRealize Operations Management Pack

- EFI-enabled VMs and Disk.DiskMaxIOSize

In the VAAI document, it is a similar update:

- vSphere 6 changes, mainly focused on the thin virtual disk XCOPY enhancements

- UNMAP changes, block counts, performance and in-guest support (EnableBlockDelete)

Both documents are also updated for FlashArray//m, but this is mainly a cosmetic change: nothing really changes for the VMware environment, and no recommendations have changed. Of course, the documents have also been cleaned up and rearranged to be more reader-friendly, with a semi-new format as well.

Important! If you have old versions of these documents, delete them! These get updated frequently (a few times a year at least) and these changes can be important. When needing to refer to the guides, please check back to the Pure Storage community for the latest version.

Enjoy! As always, feedback on these documents is welcome.

UNMAP Block Count Behavior Change in ESXi 5.5 P3+

I was recently doing some troubleshooting for a customer who was using my UNMAP PowerCLI script and discovered a change in ESXi 5.5+ UNMAP. The issue was that the script was taking quite a while to complete. After some logic optimizations and increased timeouts, the script sped up a bit and fewer timeout errors occurred, but a bunch of the UNMAP operations were still taking a lot longer than expected. Eventually we threw our hands up and said it was good enough. A bit more recently, I was testing a third-party UNMAP tool and ran into similar behavior, so I dug into it a bit more and found some semi-unexpected changes in how UNMAP works, specifically the behavior when leveraging non-default block iteration counts. Continue reading “UNMAP Block Count Behavior Change in ESXi 5.5 P3+”

Pure Storage ASCII Art Contest

I wanted to let people know about a fun contest for our customers that we just started today at Pure Storage, the brainchild of my esteemed coworker Barkz. In our GUI (also visible when you log in to the array CLI) there is a login banner you can create to greet you, or warn you, as the case may be. The banner is just an ASCII text box, but we have had a few customers create some cool banners in the form of ASCII images.

Host Connectivity Reporting Changes and IO Balance: Part 2

In Part 1 of this two-parter, I spoke about our new CLI-based I/O Balance tool customers can use to verify that the I/O coming from their host is balanced across the paths that are configured.
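To make the idea of "balanced" I/O concrete, here is a minimal sketch of the kind of check involved. This is not how the FlashArray tool is implemented; the path names, the I/O counts, and the 10-percentage-point tolerance are all hypothetical values chosen for illustration.

```python
def path_shares(io_counts):
    """Return each path's fraction of the total I/O count."""
    total = sum(io_counts.values())
    if total == 0:
        # No I/O observed: report a zero share for every path.
        return {path: 0.0 for path in io_counts}
    return {path: count / total for path, count in io_counts.items()}


def is_balanced(io_counts, tolerance=0.10):
    """True if every path's share is within `tolerance` of an even split.

    `tolerance` is an assumed threshold, not a value from any vendor tool.
    """
    shares = path_shares(io_counts)
    if not shares:
        return True
    expected = 1.0 / len(shares)
    return all(abs(share - expected) <= tolerance for share in shares.values())


# Hypothetical per-path I/O counts for one host with four paths.
even_host = {"path-a": 250, "path-b": 250, "path-c": 260, "path-d": 240}
skewed_host = {"path-a": 900, "path-b": 100}

print(is_balanced(even_host))    # every share is close to 25%
print(is_balanced(skewed_host))  # one path carries 90% of the I/O
```

A host that fails a check like this is often one where multipathing is not set to round robin or where some paths are down, which is what the reporting described in this post is meant to surface.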

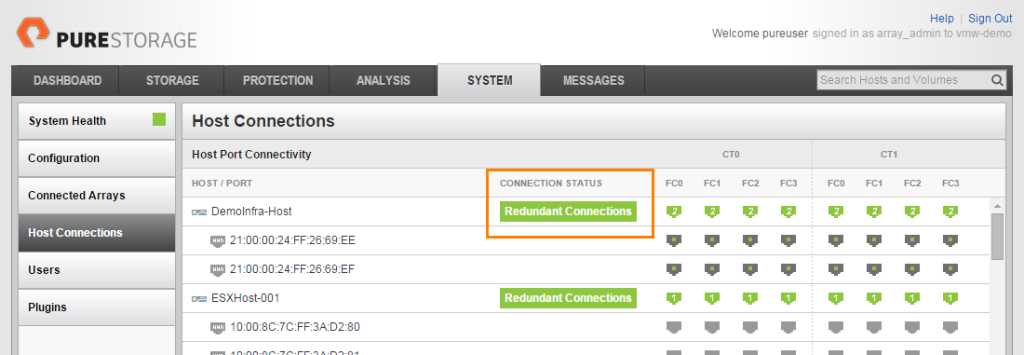

We have also made some enhancements in the GUI for host connectivity reporting. For a while now, there has been a screen inside the System tab of the FlashArray GUI that reports on the redundancy of host connections to the FlashArray:

Continue reading “Host Connectivity Reporting Changes and IO Balance: Part 2”


The Pure Storage FlashArray vROPs Adapter v1

The Pure Storage Management Pack for VMware vRealize Operations Manager version 1 is now out! Download it here. This is the latest in our aggressive 2015 roadmap of VMware management integration, whether those integration points are new or updated.

So first, what is a management pack? A management pack is a plugin of sorts that can be installed into vRealize Operations Manager (vROPs) and that provides context and relationships to existing objects inside vROPs. How these objects are related depends on what the pack represents. In the case of Pure Storage, the pack relates VMware objects, such as VMs and datastores, to volumes on a particular FlashArray, as well as to FlashArray host groups and hosts. Continue reading “The Pure Storage FlashArray vROPs Adapter v1”

FlashArray //m and VMware Integration–What do you need to know?

Last week Pure Storage introduced the latest iteration in the FlashArray product line: the FlashArray //m. While Pure Storage has traditionally focused on software innovation from a technical standpoint, we decided that the only way to stay ahead of (and lead) the curve was to innovate in the hardware realm as well. Therefore, for the last few years, development on producing a hardware platform that could keep up with compute and storage speed and capacity leaps has been at full tilt. This produced the brand new FlashArray //m.

Continue reading “FlashArray //m and VMware Integration–What do you need to know?”

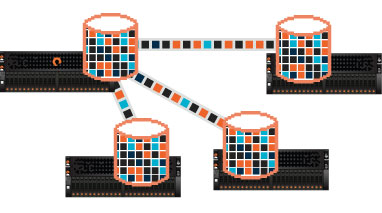

Site Recovery Manager 6 and Storage DRS Tagging: Part II–FlashArray SRA

The first post in this two-part series was about the general new feature of VMware vCenter Site Recovery Manager 6.0 and Storage DRS. Read about it here. In this post, I am going to take a bit more of a specific look at this when it comes to the FlashArray and our Storage Replication Adapter (SRA).

Continue reading “Site Recovery Manager 6 and Storage DRS Tagging: Part II–FlashArray SRA”

Join Pure Storage on June 1st for the future of Flash!

Non-technical post here. Some information though for an upcoming event:

Beginning June 1st, Pure Storage will be hosting events in 50+ cities all over the world to make some exciting announcements concerning the future of flash in the datacenter as well as a sweet new product launch. We’ll be talking about a vision that is transforming the storage industry and more. I highly recommend registering for one in a city near you!

Continue reading “Join Pure Storage on June 1st for the future of Flash!”

XCOPY Improvement in vSphere 6.0 for Thin Virtual Disks

Here is another “look what I found” storage-related post for vSphere 6. Once again, I am still looking into exact design changes, so this is what I observed and my educated guess on how it was done. Look for more details as time wears on.

***This blog post really turned out longer than I expected, probably should have been a two parter, so I apologize for the length.***

Like usual, let me wax historical for a bit… A little over a year ago, in my previous job, I wrote a proposal document to VMware to improve how they handled XCOPY. XCOPY, as you may be aware, is the SCSI command used by ESXi to clone/Storage vMotion/deploy from template VMs on a compatible array. It seems that in vSphere 6.0 VMware implemented these requests (my good friend Drew Tonnesen recently blogged on this). My request centered around three things:

- Allow XCOPY to use a much larger transfer size (the current maximum is 16 MB), a.k.a. how much space a single XCOPY SCSI command can describe. Microsoft ODX, for example, can handle XCOPY sizes up to 256 MB (though the ODX implementation is a bit different).

- Allow ESXi to query the Maximum Segment Length during an Extended Copy (XCOPY) Receive Copy Results and use that value. This value tells ESXi what to use as a maximum transfer size. This will allow the end user to avoid the hassle of having to deal with manual transfer size changes.

- Allow for thin virtual disks to leverage a larger transfer size than 1 MB.
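To put the transfer sizes mentioned in this list in perspective, here is a rough back-of-the-envelope sketch of how many XCOPY commands it would take to describe a fully allocated virtual disk at each size. The 100 GiB disk is a hypothetical example, and the calculation assumes every command describes a full transfer-size chunk, which real workloads will not always do.

```python
MIB = 1024 * 1024
GIB = 1024 * MIB


def xcopy_commands(bytes_to_copy, transfer_size_bytes):
    """Minimum number of XCOPY commands needed to describe `bytes_to_copy`,
    assuming each command covers up to `transfer_size_bytes`."""
    if transfer_size_bytes <= 0:
        raise ValueError("transfer size must be positive")
    # Ceiling division without floating point.
    return -(-bytes_to_copy // transfer_size_bytes)


disk_size = 100 * GIB  # hypothetical virtual disk size

for label, size in [
    ("1 MB (thin virtual disk limit)", 1 * MIB),
    ("16 MB (current maximum)", 16 * MIB),
    ("256 MB (ODX-style upper bound)", 256 * MIB),
]:
    print(f"{label}: {xcopy_commands(disk_size, size)} commands")
# 1 MB   -> 102400 commands
# 16 MB  -> 6400 commands
# 256 MB -> 400 commands
```

Fewer, larger commands mean less per-command overhead between ESXi and the array, which is the motivation behind all three requests.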

The first two are currently supported by VMware only in a very limited fashion (but stay tuned on this!), so for this post I am going to focus on the thin virtual disk enhancement and what it means on the FlashArray.

Continue reading “XCOPY Improvement in vSphere 6.0 for Thin Virtual Disks”