In my last post, I walked through configuring ActiveCluster and your VMware environment in preparation for use with Site Recovery Manager.

Site Recovery Manager and ActiveCluster Part I: Pre-SRM Configuration

In this post, I will walk through configuring Site Recovery Manager itself. There are a few prerequisites at this point:

- Everything from Part I is complete.

- Site Recovery Manager is installed and paired.

- Inventory mappings in SRM are complete (networks, folders, clusters, resource pools, etc.).

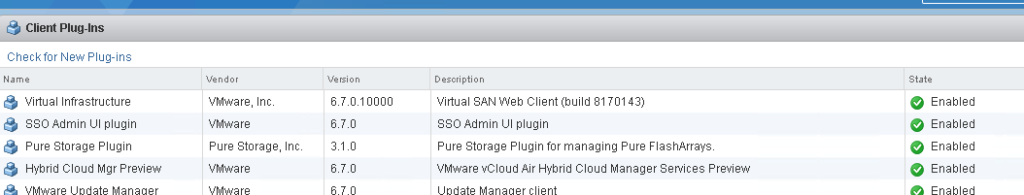

- The FlashArray SRA 3.x or later is downloaded and installed on both SRM servers.
