I have quite a few PowerShell scripts these days and I run a bunch of them quite often. All of my scripts log information to a file so I can see what happened, but I decided I wanted to log into something that could help me analyze or quickly review the data, something better than looking at a bunch of text files. One of my favorite products, VMware Log Insight, was the first thing I thought of, and its ingestion REST API makes the most sense. It took a little time to figure out the best way to do it, but it’s working great now. To the details!

The first thing to note is that there is no PowerShell module (or PowerCLI cmdlet) that I am aware of that works directly with Log Insight.

VMware Log Insight does, however, have a REST API service, not just for getting information out of Log Insight but also for putting information into it. This is how the Windows and Linux Log Insight agents work, and it is a very useful tool. This is where PowerShell comes in: it has the ever-useful cmdlet Invoke-RestMethod, which sends REST calls to a REST endpoint. Granted, almost any language can make REST calls, but PowerShell is my friend, so let’s focus on that.

Introduction to the Log Insight Ingestion REST API

The Log Insight ingestion API is officially documented by VMware, and there is also a good blog post by Steve Flanders that helps explain how to use it.

In short, you can make POST REST calls to inject events into Log Insight that standard agents or syslog sources cannot easily produce. A message looks like this:

    {"messages": [{
        "fields": [
            {"name": "VMFS", "content": "MyDatastore"},
            {"name": "ESXi", "content": "esxiserver1"}
        ],
        "text": "A new datastore has been created."
    }]}

You can keep adding entries to the “fields” array as you see fit with more information. Each one needs a “name” item and a “content” item. The “text” item is the message you want to appear describing the whole event. More on that in a bit.

An important point here is that all of this needs to be formatted as JSON (JavaScript Object Notation), which is typical of REST. The JSON body gets sent to a base URL, which looks like this:

http://loginsight.csgvmw.local:9000/api/v1/messages/ingest/624a9370

“loginsight.csgvmw.local” is my Log Insight server and “:9000” is the REST service port. “/api/v1/messages/ingest/” is the rest of the path required to reach the ingestion API. The final part is the UUID of the source sending the message. You can make this a hex value of some sort or just a standard numerical identifier, but the best practice is to follow the UUID standard. I prefer to make it something I recognize, maybe an IP or an OUI.
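A minimal sketch of assembling that URL from its parts, assuming the server name from this post and a randomly generated GUID as the agent ID (one way to follow the UUID recommendation). The variable names are just for illustration; `[System.UriBuilder]` is a standard .NET class, and plain string concatenation works just as well.

```powershell
# Sketch: build the ingestion URL from server, port, path, and agent ID.
# Assumption: a random GUID is used as the agent ID; in a real script you
# would probably generate it once and reuse it so every run reports the same ID.
$logInsightServer = "loginsight.csgvmw.local"
$agentId = [guid]::NewGuid().ToString()

$builder = [System.UriBuilder]::new("http", $logInsightServer, 9000, "/api/v1/messages/ingest/$agentId")
$ingestUrl = $builder.Uri.AbsoluteUri

# Something like http://loginsight.csgvmw.local:9000/api/v1/messages/ingest/<random GUID>
$ingestUrl
```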

There is more to this if you want details; I’d refer back to the documentation mentioned above. Anyway… to PowerShell!

Building a Log Insight REST API call with PowerShell

While REST uses JSON, most objects and variables in PowerShell are not stored that way. PowerShell commonly uses arrays, hash tables, and other objects, so these need to be converted into JSON. Let’s take the situation where I am running VMFS UNMAP and I want to report some information back to Log Insight:

- The VMFS volume name

- The NAA of the device hosting that VMFS

That information is easy enough to get. But how do we store it in the JSON format the REST call requires? Let’s look at the structure of the JSON that Log Insight expects, in a very simple version:

    {"messages":
        [{
            "text": "VMFS UNMAP has been run.",
            "fields": [
                {
                    "name": "Datastore",
                    "content": "vmfs"
                }
            ]
        }]
    }

The top-level object is a hash table with a single key, “messages”. The value of “messages” is an array (note the square bracket) that holds a hash table with “text” and “fields”. “fields” is in turn an array (a list of items), and each item in that array is another hash table with a “name” item and a “content” item.
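One way to check which levels are hash tables and which are arrays is to parse the simple sample back into PowerShell with ConvertFrom-Json: JSON square brackets come back as arrays, and curly braces come back as objects with properties. A small sketch using the sample message above:

```powershell
# Parse the simple sample message to see which levels are arrays
$sample = @'
{"messages": [{
    "text": "VMFS UNMAP has been run.",
    "fields": [ { "name": "Datastore", "content": "vmfs" } ]
}]}
'@

$parsed = $sample | ConvertFrom-Json

$parsed.messages -is [array]              # True: "messages" holds an array
$parsed.messages[0].text                  # VMFS UNMAP has been run.
$parsed.messages[0].fields -is [array]    # True: "fields" is an array as well
$parsed.messages[0].fields[0].name        # Datastore
```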

Hash tables are created in PowerShell like so:

    $hashname = @{}

Arrays are created in PowerShell like so:

    $arrayname = @()

Note the difference: hash tables use curly braces, arrays use parentheses. So let’s build a REST call.
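Before building the call, here is a quick sketch of how the two types behave once created. The variable names match the examples above and are only illustrative; the key point is that an array can hold hash tables, which is exactly the shape “fields” needs.

```powershell
# Hash table: key/value pairs, created with @{}
$hashname = @{}
$hashname["name"] = "VMFSfield"      # add a key with index notation
$hashname.content = "MyDatastore"    # dot notation adds a key too

# Array: an ordered list of items, created with @()
$arrayname = @()
$arrayname += "first item"           # += builds a new array with the item appended
$arrayname += $hashname              # a hash table is added as a single item

$hashname.GetType().Name    # Hashtable
$arrayname.GetType().Name   # Object[]
$arrayname.Count            # 2
```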

First I need to enter my information: the VMFS name and NAA. My VMFS name (“MyDatastore”) is stored in a string variable named $vmfs, and my NAA (naa.624a9370a847c250adbb1c7b00011aca) is stored in a variable named $naa. Easy enough. According to the information above, each of these needs to be entered into a hash table with a “name” item and a “content” item. So let’s create a hash table for each.

    $restVmfs = [ordered]@{
        name = "VMFSfield"
        content = $vmfs
    }

    $restNaa = [ordered]@{
        name = "NAAfield"
        content = $naa
    }

So I now have two hash tables, $restVmfs and $restNaa. I am naming the VMFS field VMFSfield and the NAA field NAAfield. Also note the [ordered] prefix. It makes sure the keys always stay in the stated order, which a plain hash table does not guarantee (technically, [ordered] creates an ordered dictionary rather than a plain hash table).
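A small sketch of what [ordered] buys you, using the same field values as above: the ordered version always lists and serializes its keys in the order they were written.

```powershell
# Plain hash table: key order depends on internal hashing and is not guaranteed
$plain = @{ name = "VMFSfield"; content = "MyDatastore" }
$plain.Keys -join ", "                    # may or may not be "name, content"

# Ordered dictionary: keys stay in the order they were written
$ordered = [ordered]@{ name = "VMFSfield"; content = "MyDatastore" }
$ordered.Keys -join ", "                  # name, content
$ordered | ConvertTo-Json -Compress       # {"name":"VMFSfield","content":"MyDatastore"}
$ordered.GetType().FullName               # System.Collections.Specialized.OrderedDictionary
```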

Now I need to include this information in the rest of the properly formatted JSON body for the REST call.

The “fields” portion is an array, so let’s put these two fields in an array called $fields.

    $fields = @($restVmfs, $restNaa)

Note the parentheses: this is an array. If you want more fields, just create them as above and add them to this array, separated by commas. For ease of explanation I will stick with two fields.
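If you end up with many fields, a small helper can generate the array for you. This is a hypothetical function (ConvertTo-LogInsightField is not part of any module); it is a sketch that takes an ordered set of name/value pairs and returns the array of name/content hash tables described above.

```powershell
# Hypothetical helper: turn ordered name/value pairs into the array of
# {name, content} entries that Log Insight expects in "fields".
function ConvertTo-LogInsightField {
    param(
        [Parameter(Mandatory)]
        [System.Collections.IDictionary]$Values
    )
    $fields = @()
    foreach ($entry in $Values.GetEnumerator()) {
        $fields += [ordered]@{
            name    = [string]$entry.Key
            content = [string]$entry.Value
        }
    }
    # The leading comma stops PowerShell from unrolling the array on return
    return ,$fields
}

$fields = ConvertTo-LogInsightField -Values ([ordered]@{
    VMFSfield = "MyDatastore"
    NAAfield  = "naa.624a9370a847c250adbb1c7b00011aca"
})
```

Adding another field is then just another line in the input dictionary.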

Next is the slightly tricky part. We need to combine the “text” item with the “fields” array in one hash table, then put that hash table inside an array that becomes the value of “messages”. We can do this all at once:

    $restcall = @{
        messages = ([Object[]]($messages = [ordered]@{
            text = "Completed a VMFS UNMAP on a FlashArray volume."
            fields = ([Object[]]$fields)
        }))
    }

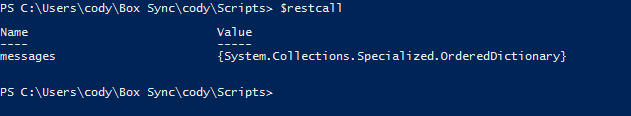

I am creating two variables at once. The first is an ordered hash table called $messages, which combines my new “text” item with the $fields array I created earlier. In the same statement, the [Object[]] cast wraps $messages in a one-item array, which becomes the value of “messages” in a new hash table called $restcall. I have now built my REST body! Well, kind of… The printout of $restcall looks like this:

Not very nice looking :(. That is because it is a collection of hash table objects; it is not JSON yet. Well, PowerShell to the rescue!

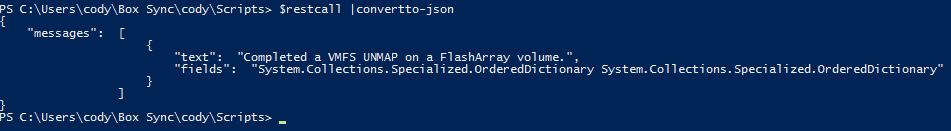

PowerShell has two handy-dandy cmdlets: ConvertTo-Json and ConvertFrom-Json. The former is what we want. So let’s take the $restcall variable and convert it to JSON like so:

    $restcall | ConvertTo-Json

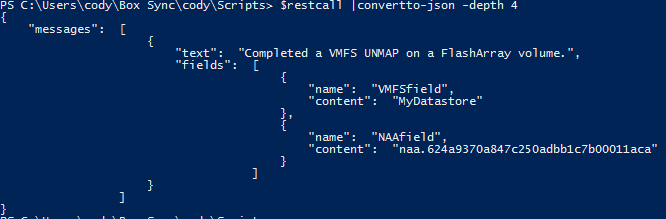

It still has weird fields. That is because ConvertTo-Json only serializes two levels deep by default; anything nested deeper is replaced with its type name. Thankfully, there is a -Depth parameter that tells it how many levels to include. The depth we need is 4. So let’s try:

    $restcall | ConvertTo-Json -Depth 4

That looks good to me! Very familiar, right? Let’s combine these last two steps to make it tighter:

    $restcall = @{
        messages = ([Object[]]($messages = [ordered]@{
            text = "Completed a VMFS UNMAP on a FlashArray volume."
            fields = ([Object[]]$fields)
        }))
    } | ConvertTo-Json -Depth 4

This will create the $restcall in JSON format immediately.
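To confirm the body really has the expected shape before sending it, you can convert it back with ConvertFrom-Json. The sketch below rebuilds the same body with sample values so it runs on its own. It uses the @() array subexpression in place of the [Object[]] cast; both wrap the single message in a one-item array, and without either one “messages” would be written as a single JSON object instead of an array. It also compares the default depth with -Depth 4 (newer PowerShell versions may print a truncation warning for the shallow version).

```powershell
# Sample values standing in for the real VMFS name and NAA
$vmfs = "MyDatastore"
$naa  = "naa.624a9370a847c250adbb1c7b00011aca"

$fields = @(
    [ordered]@{ name = "VMFSfield"; content = $vmfs },
    [ordered]@{ name = "NAAfield";  content = $naa  }
)
$body = @{
    # @(...) keeps "messages" an array even though it holds one message
    messages = @([ordered]@{
        text   = "Completed a VMFS UNMAP on a FlashArray volume."
        fields = $fields
    })
}

$shallow = $body | ConvertTo-Json            # default depth: nested levels become type-name strings
$deep    = $body | ConvertTo-Json -Depth 4   # deep enough for every level of this body

# Round-trip the deep version to confirm the structure survived
$check = $deep | ConvertFrom-Json
$check.messages -is [array]                  # True
$check.messages[0].fields[1].name            # NAAfield
```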

Now to execute the REST API call…

Executing a Log Insight REST API call with PowerShell

Okay, now we have a variable called $restcall properly formatted as JSON. Yay! What next? Well, build the URL. You can hard-code it or build it however you like. I created mine like this:

    $loginsightserver = "loginsight.csgvmw.local"
    $loginsightagentID = "624a9370"
    $resturl = ("http://" + $loginsightserver + ":9000/api/v1/messages/ingest/" + $loginsightagentID)

This allows me to easily change the base Log Insight server and the UUID. Notice my UUID is the Pure Storage FlashArray OUI. That’s how I roll.

The URL is not the only thing you need: the call itself is made with Invoke-RestMethod. This is the easy part of all of this. Just run this:

    Invoke-RestMethod $resturl -Method Post -Body $restcall -ContentType 'application/json'

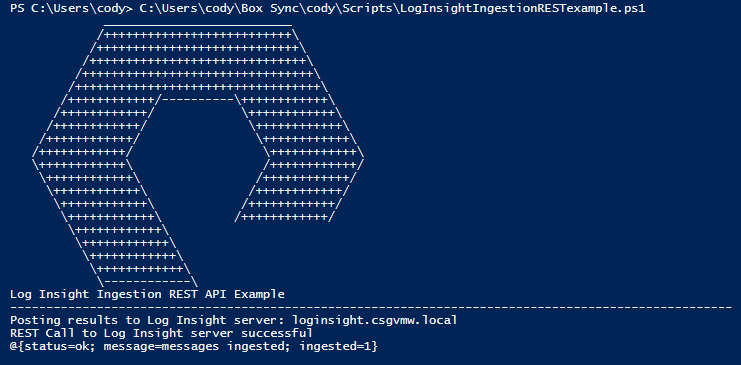

Now the REST call is sent to Log Insight! You will get a response that looks like this if it is successful:

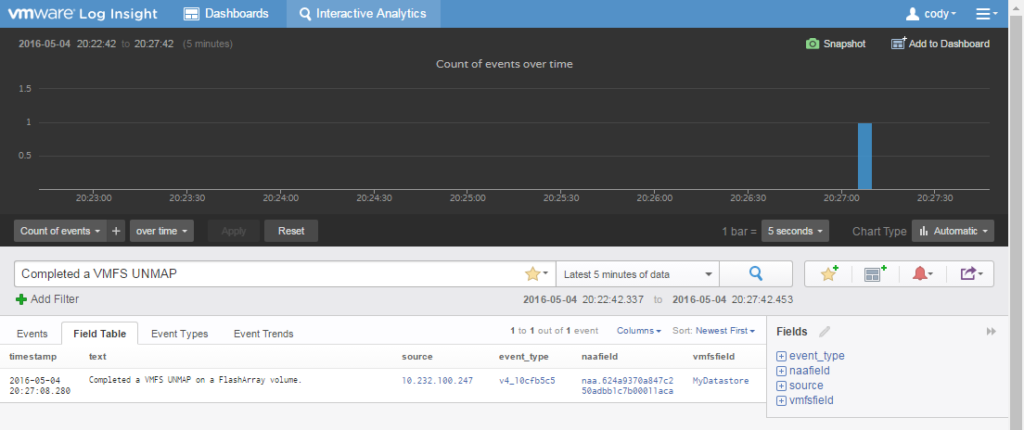

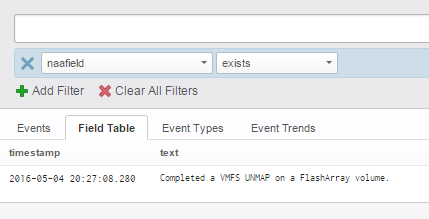

If we go to Log Insight and query for something in this message, like our text field:

We see our information there: NAA and VMFS. We can even query by one of our fields, like NAAfield.

Cool stuff! I am definitely putting this in my scripts now. To see a larger example of this process in a .ps1 file, with some error catching etc., take a look at this example on my GitHub:

https://github.com/codyhosterman/powercli/blob/master/LogInsightIngestionRESTexample.ps1
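For a rough idea of how the request and some basic error handling could be packaged for reuse, here is a small sketch. It is not the GitHub script; New-LogInsightRequest and Send-LogInsightMessage are hypothetical names. The first function collects the Invoke-RestMethod parameters in a hash table so they can be splatted, and the second sends them and turns a failure into a warning instead of stopping the calling script.

```powershell
# Hypothetical helper: gather everything Invoke-RestMethod needs for splatting
function New-LogInsightRequest {
    param(
        [Parameter(Mandatory)][string]$Server,
        [Parameter(Mandatory)][string]$AgentId,
        [Parameter(Mandatory)][string]$JsonBody,
        [int]$Port = 9000
    )
    @{
        Uri         = "http://${Server}:$Port/api/v1/messages/ingest/$AgentId"
        Method      = "Post"
        Body        = $JsonBody
        ContentType = "application/json"
    }
}

# Hypothetical wrapper: send the request, report failures as warnings
function Send-LogInsightMessage {
    param([Parameter(Mandatory)][hashtable]$Request)
    try {
        Invoke-RestMethod @Request -ErrorAction Stop
    }
    catch {
        Write-Warning "Log Insight ingestion failed: $($_.Exception.Message)"
    }
}

$request = New-LogInsightRequest -Server "loginsight.csgvmw.local" -AgentId "624a9370" -JsonBody '{"messages":[{"text":"test"}]}'
# Send-LogInsightMessage -Request $request
```

Uncommenting the last line sends the event; everything before it only builds the request.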

There is a PowerShell module to use the Log Insight Query API.

See http://www.lucd.info/2016/04/29/loginsight-module/

Hi, do you know is there a log insight API for automated deployment and installation of content paks?

Thanks

Looking at the latest API doc I don’t see a way to do it that is at least documented. I can poke around some more though

Is it possible to run the powershell script every hour to grab performance data (cpu memory lane and disk)? store the data and then graphically display the data in a dashboard?

Sure! The easiest option would be to run the PowerShell script as a scheduled task in Windows. Task Scheduler will allow it to be run on any interval you want. That is probably the simplest option. vRealize Orchestrator could do it too. But that would be a little more work.