vSphere 6.7 core storage “what’s new” series:

- What’s New in Core Storage in vSphere 6.7 Part I: In-Guest UNMAP and Snapshots

- What’s New in Core Storage in vSphere 6.7 Part II: Sector Size and VMFS-6

- What’s New in Core Storage in vSphere 6.7 Part III: Increased Storage Limits

- What’s New in Core Storage in vSphere 6.7 Part IV: NVMe Controller In-Guest UNMAP Support

- What’s New in Core Storage in vSphere 6.7 Part V: Rate Control for Automatic VMFS UNMAP

- What’s New in Core Storage in vSphere 6.7 Part VI: Flat LUN ID Addressing Support

In vSphere 6.5, a new version of VMFS was introduced: VMFS-6. A behavior that many noted was that it was not always the default option for their storage. ESXi (unless told otherwise) would default to formatting some storage with VMFS-5. So when you installed ESXi, the default datastore that got created would be VMFS-5.

The issue with this was that VMFS-5 was, well, not VMFS-6: no automatic UNMAP, etc. Furthermore, there is no in-place upgrade path from VMFS-5 to VMFS-6; the only option is deleting the file system and reformatting with VMFS-6. This of course was a bit annoying for many.

The reason for this, or at least the explanation for the behavior, has to do with the storage sector size.

VMFS-6 introduced support for storage that has a 4K sector size. The main change is that all metadata on VMFS-6 is aligned to 4K boundaries. There wasn’t direct support for 4K native storage yet in ESXi, but VMFS-6 was set up for it.

In vSphere 6.5, 4K storage would be listed with a sector format of 512e (512-byte emulated). Storage that has 512-byte sectors would show up as 512n (512-byte native).

In vSphere 6.7, support has been extended to 4K native storage, which now shows up with a sector format of 4Kn SWE. This means “4 KB native, Software Emulated”. In other words, a software emulation layer exists so that applications and VMs that need, or were designed for, a 512-byte sector size can continue to run. VMDKs still report a sector size of 512 bytes, even if the storage below is 4K.
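To make the idea of emulating 512-byte sectors on top of 4K sectors a bit more concrete, here is a small conceptual sketch in Python. It is not how ESXi implements its emulation layer (I don't have visibility into that); the names `locate` and `write_logical` and the in-memory `bytearray` "disk" are made up purely for illustration. It just shows the address math and why a sub-4K write turns into a read-modify-write.

```python
from typing import Tuple

LOGICAL = 512                 # sector size the guest sees
PHYSICAL = 4096               # sector size of the underlying device
RATIO = PHYSICAL // LOGICAL   # 8 logical sectors fit in one physical sector


def locate(lba: int) -> Tuple[int, int]:
    """Map a 512-byte logical block address to (physical sector, byte offset)."""
    return lba // RATIO, (lba % RATIO) * LOGICAL


def write_logical(disk: bytearray, lba: int, data: bytes) -> None:
    """Write one 512-byte logical sector via read-modify-write of a 4K sector."""
    if len(data) != LOGICAL:
        raise ValueError("data must be exactly one 512-byte logical sector")
    sector, offset = locate(lba)
    start = sector * PHYSICAL
    block = bytearray(disk[start:start + PHYSICAL])  # read the whole 4K sector
    block[offset:offset + LOGICAL] = data            # modify 512 bytes of it
    disk[start:start + PHYSICAL] = block             # write the 4K sector back
```

For example, logical block 9 lands in physical sector 1 at byte offset 512, so a single 512-byte write there touches the entire second 4K sector.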

Support for this storage is still somewhat limited:

- Only local SAS and SATA HDDs are supported

- Must use VMFS-6

- Booting ESXi from 4Kn drives requires UEFI boot, not traditional BIOS.

- 4Kn SSD, NVMe, and RDM to VMs are not supported

- Third party multi-pathing plugins are not supported with 4Kn.
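The restrictions in the list above can be written down as a simple checklist. The following is a hypothetical sketch only: `FourKnDevice` and `unsupported_reasons` are names I made up, not a VMware API, and the rules are just the ones listed in this post, so verify against current VMware documentation before relying on them.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FourKnDevice:
    """Hypothetical description of a 4Kn device; not a VMware data structure."""
    local: bool
    interface: str               # e.g. "SAS", "SATA", "NVMe"
    media: str                   # "HDD" or "SSD"
    vmfs_version: int
    is_rdm: bool = False         # presented to a VM as an RDM
    third_party_mpp: bool = False
    boot_device: bool = False
    uefi_boot: bool = False


def unsupported_reasons(dev: FourKnDevice) -> List[str]:
    """Return the listed restrictions this device violates (empty if none)."""
    reasons = []
    if not dev.local:
        reasons.append("only local devices are supported")
    if dev.interface not in ("SAS", "SATA") or dev.media != "HDD":
        reasons.append("only SAS and SATA HDDs are supported")
    if dev.vmfs_version != 6:
        reasons.append("VMFS-6 is required")
    if dev.is_rdm:
        reasons.append("RDMs to VMs are not supported")
    if dev.third_party_mpp:
        reasons.append("third-party multipathing plugins are not supported")
    if dev.boot_device and not dev.uefi_boot:
        reasons.append("booting from 4Kn requires UEFI")
    return reasons
```

An empty list means the device passes every restriction named in this post; anything else tells you which one it trips over.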

My understanding, though (and certainly verify this with VMware and your storage vendor), is that if you have SAN storage with a 4K sector size, it is supported, but it will not show up as 4Kn SWE; it will show up as 512e instead.

So your reaction now might be: okay, this doesn’t really mean anything to me. And maybe it doesn’t. But one subtle thing that has changed between 6.5 and 6.7 around all of this is the VMFS version defaults.

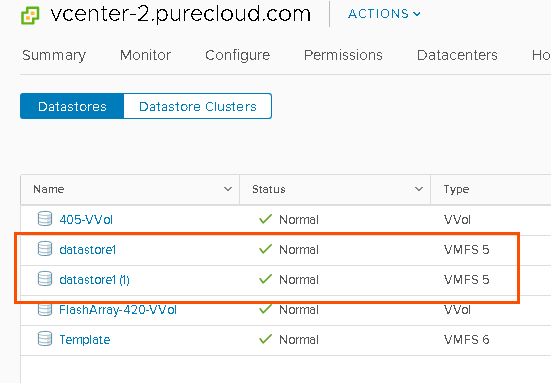

As I mentioned before, unless the storage was 512e, the default would be VMFS-5. FlashArray storage is 512n, so my automatic datastores are all VMFS-5 in vSphere 6.5:

The main reason for this was that VMFS-6 was the version required for 512e (4Kn), so that storage HAD to use VMFS-6. 512n has been around for a long time and works great with VMFS-5. Most companies want to test new file systems first, so VMware played it safe and defaulted to VMFS-5 for storage that still works with it, even though 512n works perfectly fine with VMFS-6.

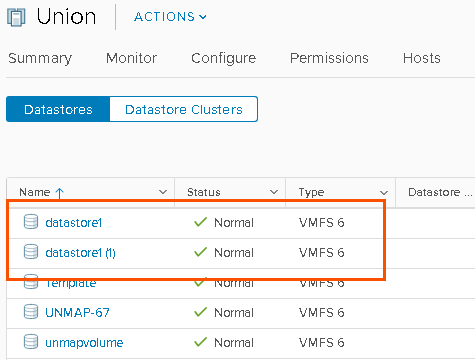

Well, now that 6.7 has come out and plenty of testing and usage of VMFS-6 has occurred, VMware has moved forward and now defaults all storage to VMFS-6. So boot volumes, or scripted formats of storage with VMFS, will now default to VMFS-6 unless otherwise specified.

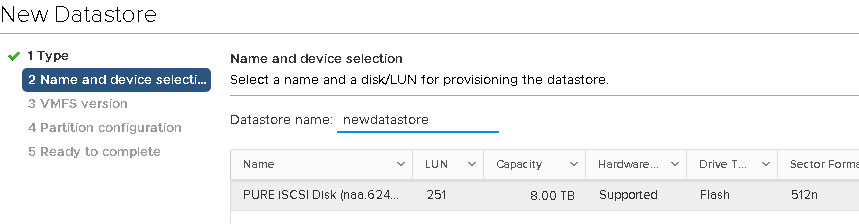

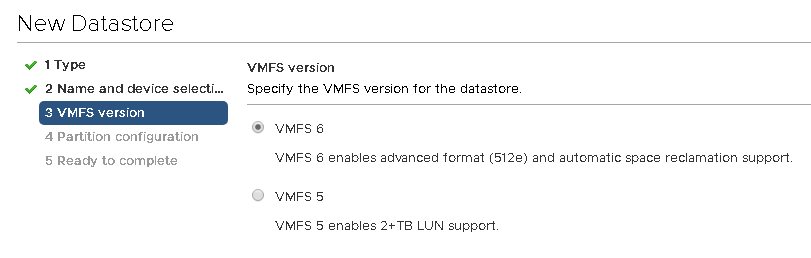

You can also see this in the new datastore wizard: VMFS-6 is now the default option for 512n storage too.
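To summarize the default behavior described in this post, here is a tiny Python sketch. The function `default_vmfs_version` is hypothetical (it is not an ESXi or PowerCLI call) and it only encodes the defaults as I've described them above for 6.5 and 6.7.

```python
def default_vmfs_version(vsphere: str, sector_format: str) -> int:
    """Return the default VMFS version as described in this post.

    vsphere: "6.5" or "6.7"
    sector_format: "512n", "512e", or "4Kn SWE"
    """
    if sector_format not in ("512n", "512e", "4Kn SWE"):
        raise ValueError(f"unknown sector format: {sector_format}")
    if vsphere == "6.7":
        # 6.7 defaults all storage to VMFS-6
        return 6
    if vsphere == "6.5":
        if sector_format == "4Kn SWE":
            # 6.5 had no direct support for 4K native storage
            raise ValueError("4Kn SWE is not available in vSphere 6.5")
        # 6.5 only defaulted to VMFS-6 when the storage was 512e
        return 6 if sector_format == "512e" else 5
    raise ValueError(f"version not covered by this sketch: {vsphere}")
```

So `default_vmfs_version("6.5", "512n")` gives 5, which is what I saw with my FlashArray volumes, while the same call with `"6.7"` gives 6. In either release you can still pick the version explicitly when formatting.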
