Virtual Volumes change quite a lot of things. One of these is how your storage volumes are actually connected. This change was necessary for two reasons:

- Scale. Traditional ESXi SCSI limits how many SCSI devices can be seen at once: 256 in 6.0 and earlier, and 512 in 6.5. This is still not enough when every virtual disk is its own volume.

- Performance. Virtual Volumes are provisioned, de-provisioned, moved, and accessed constantly. If a SCSI rescan were required every time one of these operations occurred, we would see rescan storms unlike anything this world has ever witnessed.

So VMware changed how this is done.
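
To put rough numbers on the scale point, here is a minimal sketch of the arithmetic. The 256 and 512 device limits come from the list above; the per-VM disk counts and the function name `max_powered_on_vms` are assumptions for illustration only, and the sketch ignores any other devices the host already sees.

```python
# Rough arithmetic: what if every VVol consumed one ordinary SCSI device slot?
# The limits are from the text; the disk counts per VM are assumed.

DEVICE_LIMITS = {"ESXi 6.0 and earlier": 256, "ESXi 6.5": 512}


def max_powered_on_vms(device_limit: int, data_disks_per_vm: int) -> int:
    """VMs a host could run if each VVol took one SCSI device slot.

    A powered-on VM needs one config VVol, one swap VVol,
    and one data VVol per virtual disk.
    """
    vvols_per_vm = 1 + 1 + data_disks_per_vm
    return device_limit // vvols_per_vm


if __name__ == "__main__":
    for release, limit in DEVICE_LIMITS.items():
        for disks in (1, 4):
            vms = max_powered_on_vms(limit, disks)
            print(f"{release}: {disks} disk(s) per VM -> {vms} VMs")
```

Even with a single virtual disk per VM, the device limit would be used up quickly, which is why a different connection model was needed.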

Protocol Endpoints

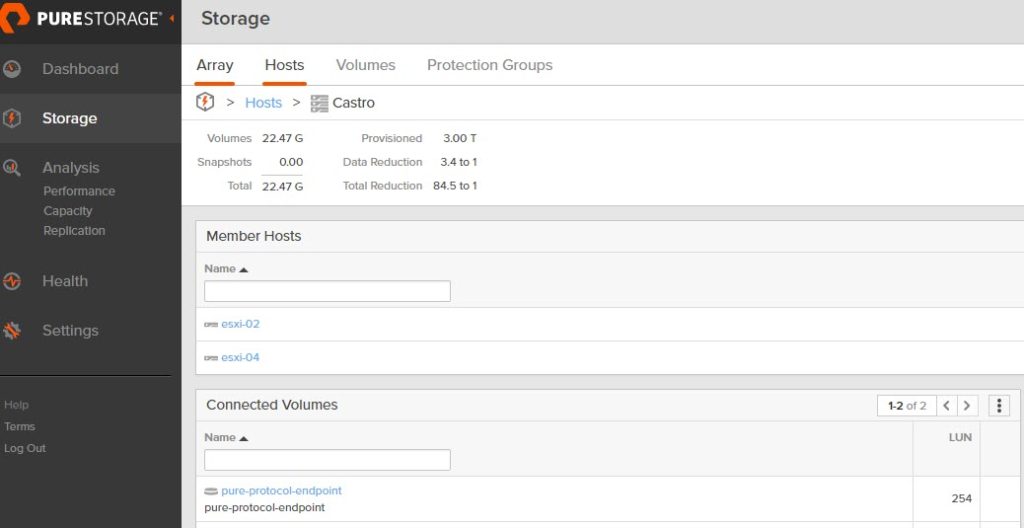

A fancy name for a simple thing. This is a special device on an array with special VPD information that identifies it as a protocol endpoint. It is of zero (or minimal) capacity and is the only volume you need to manually provision to an ESXi host. Connect it to the host (however that is done on your array) and then rescan the ESXi hosts.

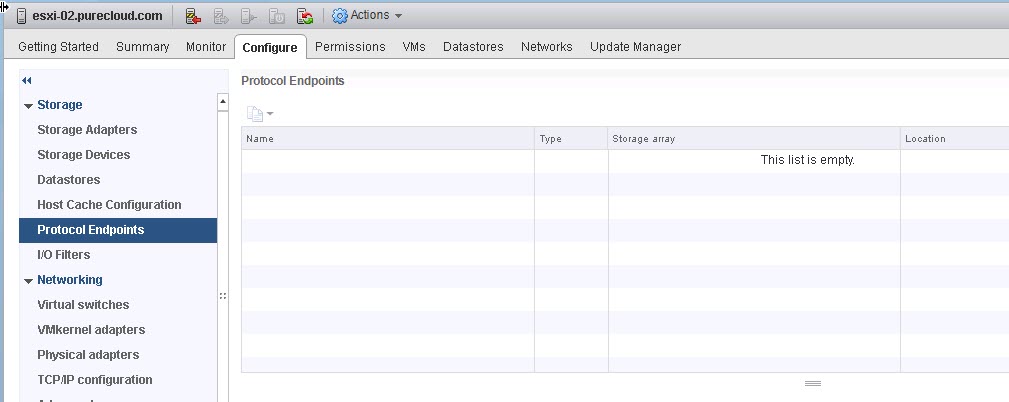

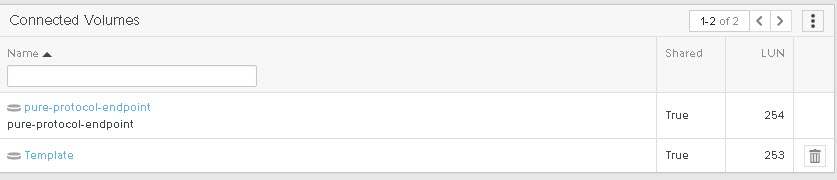

Below I have an ESXi host, which currently has no protocol endpoints

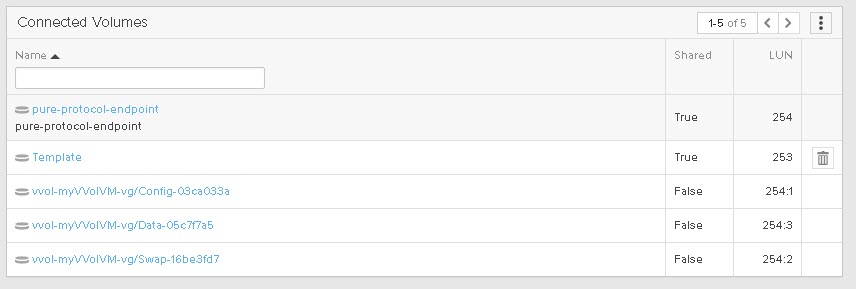

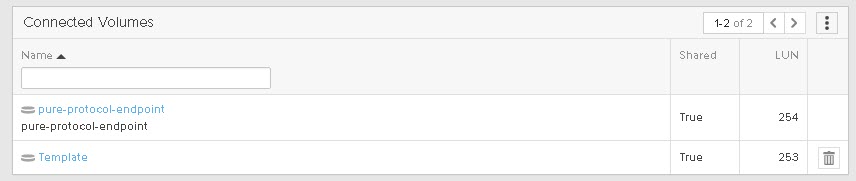

Then from my array I add my PE:

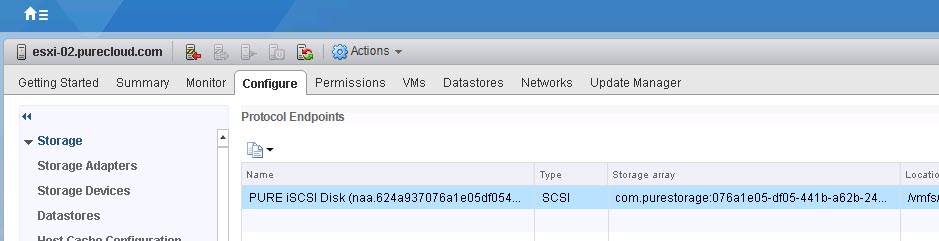

Then rescan your ESXi host. Now, you might notice that even though the PE is presented it does not show up as a PE in the “protocol endpoints” host view in the GUI until a storage container (a VVol datastore) is actually mounted and using it.

Even though the vSphere Web Client doesn’t report PEs that are currently unused, ESXi does actually see the device as a PE if you use the CLI:

    esxcli storage core device list --device=naa.624a937076a1e05df05441ba000253a3 | grep "PE"
       Is VVOL PE: true
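
If you want to check many devices at once rather than grepping one at a time, a small parser can do it. This is only a sketch: it assumes the output of `esxcli storage core device list` has an unindented device ID line followed by indented `Key: Value` lines, and the function name `vvol_pe_devices` and the second device in the sample are made up for illustration.

```python
def vvol_pe_devices(esxcli_output: str) -> list[str]:
    """Return the device IDs whose 'Is VVOL PE' field is true.

    Assumed layout: an unindented device ID line, then indented
    'Key: Value' lines belonging to that device.
    """
    pes = []
    current = None
    for line in esxcli_output.splitlines():
        if not line.strip():
            continue
        if not line[0].isspace():
            current = line.strip()  # start of a new device record
        elif current is not None:
            key, _, value = line.strip().partition(":")
            if key == "Is VVOL PE" and value.strip().lower() == "true":
                pes.append(current)
    return pes


# Hypothetical sample, trimmed to the one field that matters here.
# The second device ID is invented for the example.
SAMPLE = """\
naa.624a937076a1e05df05441ba000253a3
   Is VVOL PE: true
naa.hypothetical-non-pe-device
   Is VVOL PE: false
"""

print(vvol_pe_devices(SAMPLE))
```

On a real host you would feed it the full command output (without `--device`) instead of the sample string.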

Frankly, I am not sure if this is a Web Client feature or a bug. It is useful I guess in the sense that it shows the PEs that are actually in use by that particular host. So my guess is the former option.

VVol Bindings

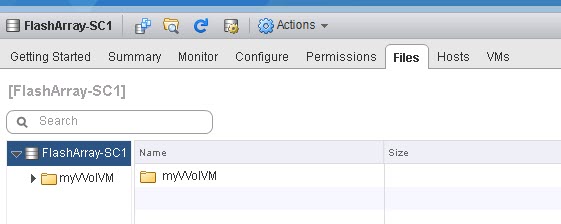

So now that we have a protocol endpoint, how are volumes connected? Well when a virtual machine is created or a new virtual disk is added to a VM (that is configured to be a virtual volume), one or more virtual volumes are created. Every VM has a config VVol (holds the configuration information), and every virtual disk is one virtual volume.

When you create a new virtual volume and it needs to be accessed by a VM, the VVol is bound to a protocol endpoint. What “bound” means is that it is presented to a host as a sub-lun through that protocol endpoint. A sub-lun is a unique connection of a VVol through a PE to a host. In other words, even if a PE is shared, it does not mean that all hosts that see the PE can see the VVol.

A VVol binding goes through the PE, so if the PE is LUN ID 255 for a given host and the VVol is sub-lun 10, the VVol LUN ID for that VVol for that host is 255:10.
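
One way to picture this is as a small record per binding. The sketch below is a toy model, not how ESXi or any array stores bindings; the `Binding` class, the `hosts_that_see` helper, and the VVol name are all hypothetical, while the 255:10 address is the example from the paragraph above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Binding:
    """One VVol presented to one host as a sub-lun behind a PE."""
    host: str
    vvol: str
    pe_lun: int   # LUN ID of the protocol endpoint on that host
    sub_lun: int  # sub-lun ID of the VVol behind the PE

    @property
    def address(self) -> str:
        return f"{self.pe_lun}:{self.sub_lun}"


def hosts_that_see(vvol: str, bindings: set[Binding]) -> list[str]:
    """Hosts with a binding for this VVol, regardless of who sees the PE."""
    return sorted({b.host for b in bindings if b.vvol == vvol})


# The PE may be connected to several hosts, but only esxi-02 has a binding.
bindings = {Binding(host="esxi-02", vvol="data-vvol-1", pe_lun=255, sub_lun=10)}

for b in bindings:
    print(b.host, b.vvol, b.address)
print(hosts_that_see("data-vvol-1", bindings))
```

The point of the model is that the binding, not the PE connection, decides which host can reach a given VVol.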

The important thing to note is that when VVols are created they are not automatically connected to hosts and left that way. They are connected only when needed, and disconnected automatically when they no longer are. This is one of the nice points of flexibility/automation that comes with VVols. So the question is, when are they bound?

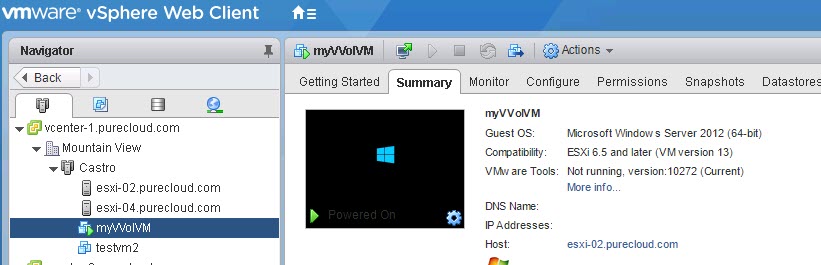

Creating a VM

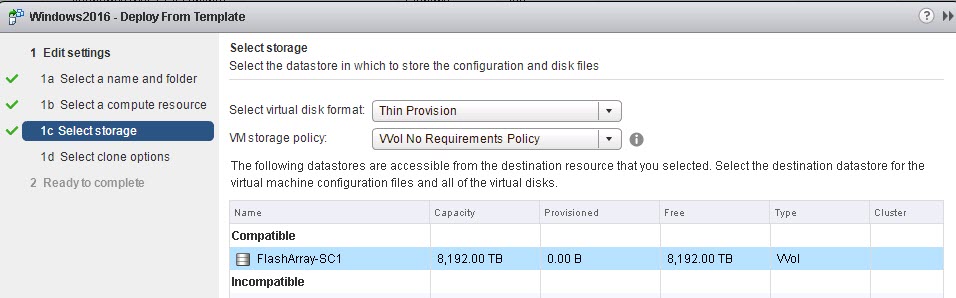

At this point though there are no VVols. So let’s provision a virtual machine.

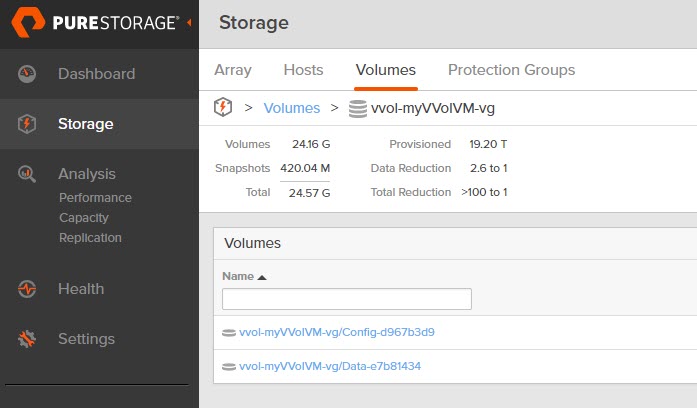

This will then go and create the VM and the respective VVols on the array. This VM has only one virtual disk, so only one data VVol will be created. Every new VM also gets a config VVol when the VM is created, which holds the configuration information of the VM itself (virtual hardware etc). I will go into more detail on what a config VVol actually is in a later blog post.

VVols do get bound when the VM is created, but only briefly.

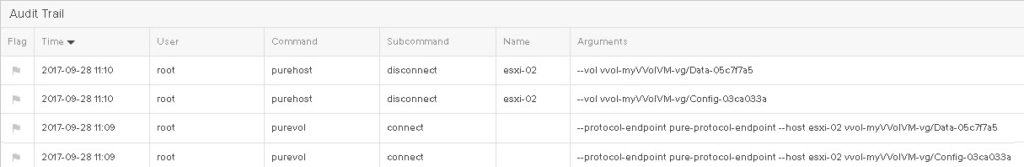

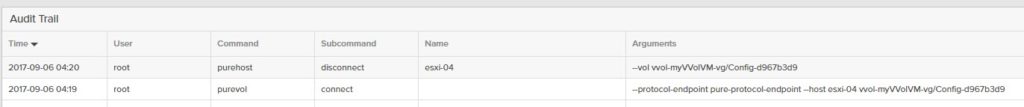

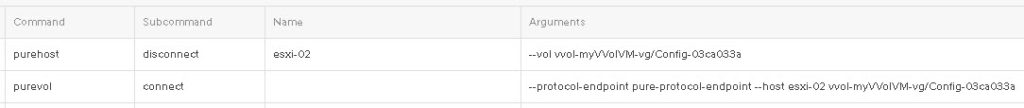

As we can see in the audit log, my two VVols (one config, one data) were connected briefly and then disconnected:

The config VVol has no remaining binding once the VM has been created.

This makes sense: neither object needs to be read by any host at this point. It will stay this way unless you do something to the VM or one of its objects needs to be accessed.

Reading the Config VVol

Well, what if you need to look at the config information of a VM even when it isn’t powered on? What happens then? Well, first off, a config VVol is basically a mini-VMFS that is hidden from direct view. A config VVol is associated with a VVol datastore and can then be read when you navigate the VVol datastore, just like VMFS. The main difference is that a VVol datastore is not a physical object. Instead, it is just an abstraction of VVol pointers and config VVol file systems.

Datastore browser

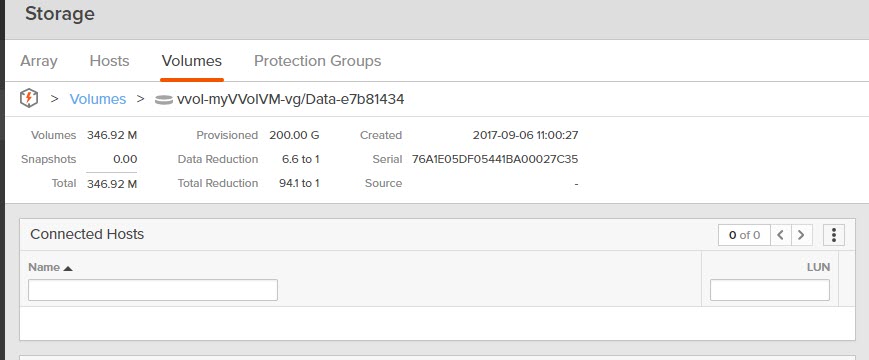

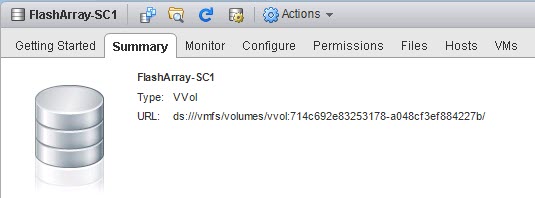

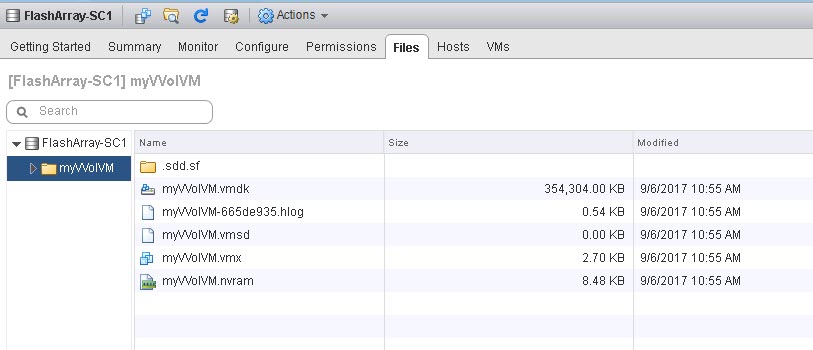

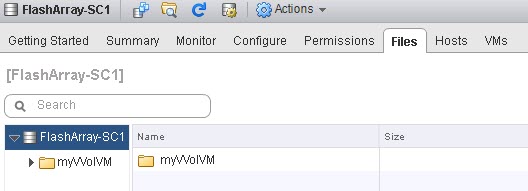

So, I have a VVol datastore:

If I click on files, it shows all of my VM folders (I just have one).

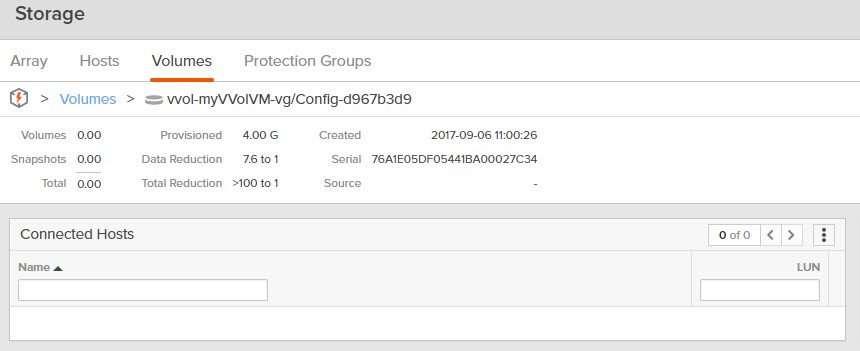

A folder is essentially a reference to a config VVol. The config VVol is still unbound.

If I click on the folder:

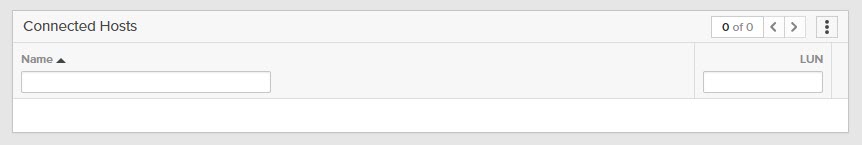

It is now bound:

It is only bound to one host: vCenter chooses a host to leverage to query the VVol datastore, which is conceptually no different than with VMFS. As soon as you back out of the folder and there is no “handle” open to the VVol datastore, the binding is removed. The config VVol is no longer needed.

We can prove this out with the array audit logs:

A binding through the protocol endpoint and then an unbind as soon as I closed the file browser (well, almost immediately; it seems to be within 15-30 seconds).

Shell change directory

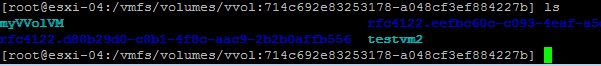

A similar, but slightly different behavior can be exhibited if you SSH into an ESXi host and navigate to the VVol datastore and cd into the folder.

When I SSH into an ESXi host and then CD into /vmfs/volumes/<name of VVol datastore>, nothing is bound:

But if I run “ls” I will see all of my config VVols get bound:

No data VVols. But after a short time, the config VVols are unbound automatically.

So it seems this behavior is slightly different between vCenter browse datastore and with shell. Browse datastore causes no bindings when you list the highest level of the VVol datastore directory, but shell binds all config VVols then unbinds them shortly after.

If I CD into a VM directory that config VVol is bound and stays that way until I CD back out.

Pretty dynamic, and really nice, as it makes sure that only the things that are actually needed are presented.

Edit VM settings

If I log into vCenter and click edit VM settings when the VM is off, I see that no bindings occur. This is due to the fact that vCenter doesn’t need to access the VMX file, it looks at what is in memory.

Though, if I make a change, I will see a bind and then an unbind. This is because the change needs to be committed to the VMX file on the config VVol. vCenter uses the host that the VM is registered to for the binding operations.

Power-On a VM

The next step is powering a VM on. What gets bound?

Well, everything. The config VVol and the data VVols are bound, and a swap VVol also gets created. All of them are bound to the host that runs the VM.

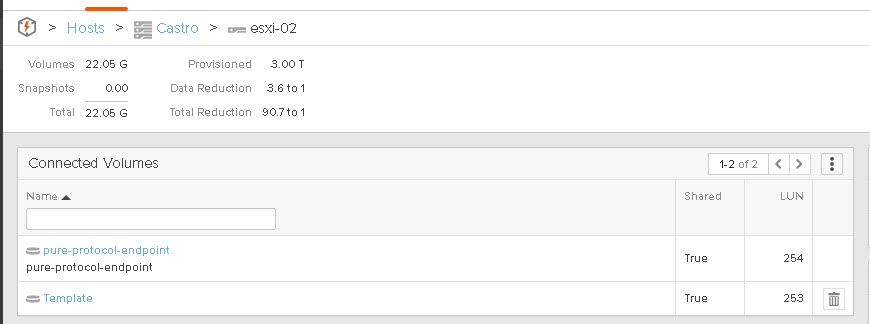

Powered-off, no bindings:

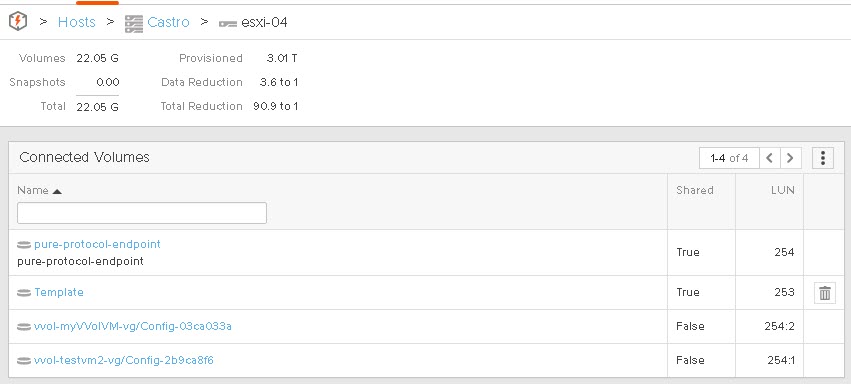

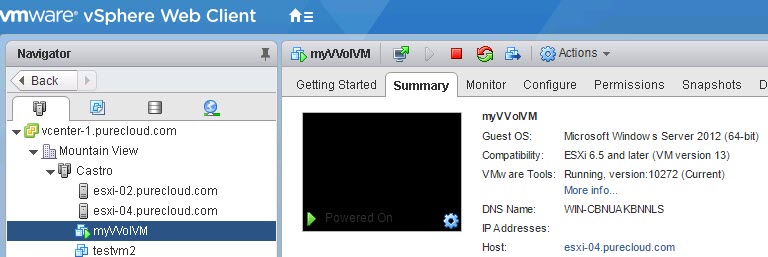

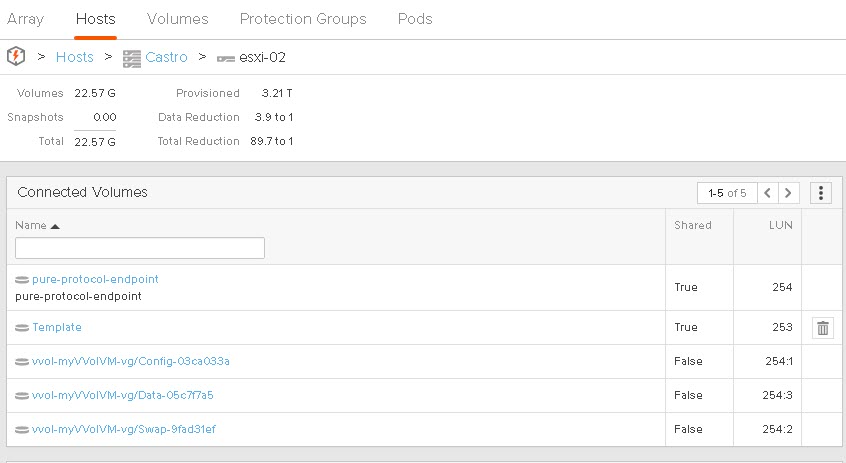

All of the VM’s VVols are bound to ESXi-02:

When you power-off the VM, all of the bindings go away (and the swap VVol is deleted entirely).
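
The power-on and power-off behavior can be summed up in a toy model. This is only a sketch of what the array audit logs showed me, not anything from the VASA API; the class `VVolVM`, its method names, and the VVol labels are hypothetical.

```python
class VVolVM:
    """Toy model of which VVols exist and which are bound for one VM."""

    def __init__(self, name: str, data_disks: int = 1):
        self.name = name
        # Every VM has a config VVol plus one data VVol per virtual disk.
        self.vvols = {"config"} | {f"data-{i}" for i in range(1, data_disks + 1)}
        self.bound = {}  # VVol label -> host it is bound to

    def power_on(self, host: str) -> None:
        # A swap VVol is created at power-on, then everything is bound
        # to the host that runs the VM.
        self.vvols.add("swap")
        self.bound = {vvol: host for vvol in self.vvols}

    def power_off(self) -> None:
        # All bindings go away and the swap VVol is deleted entirely.
        self.bound.clear()
        self.vvols.discard("swap")


vm = VVolVM("test-vm", data_disks=1)
vm.power_on("ESXi-02")
print(sorted(vm.bound.items()))
vm.power_off()
print(sorted(vm.vvols), vm.bound)
```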

vMotion

So what about if you move hosts?

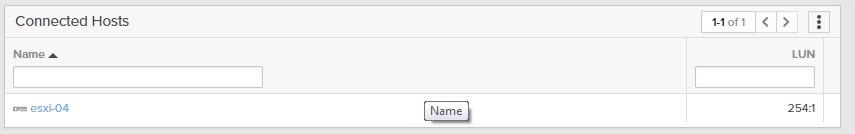

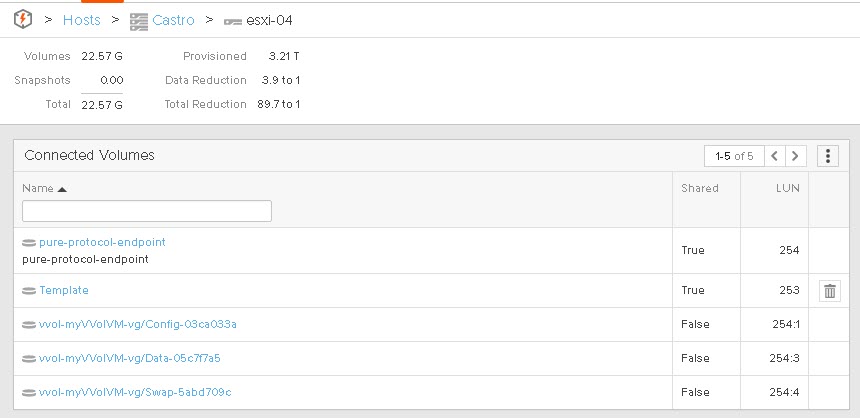

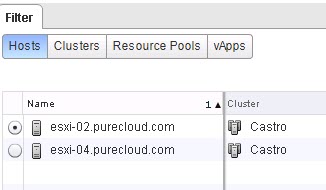

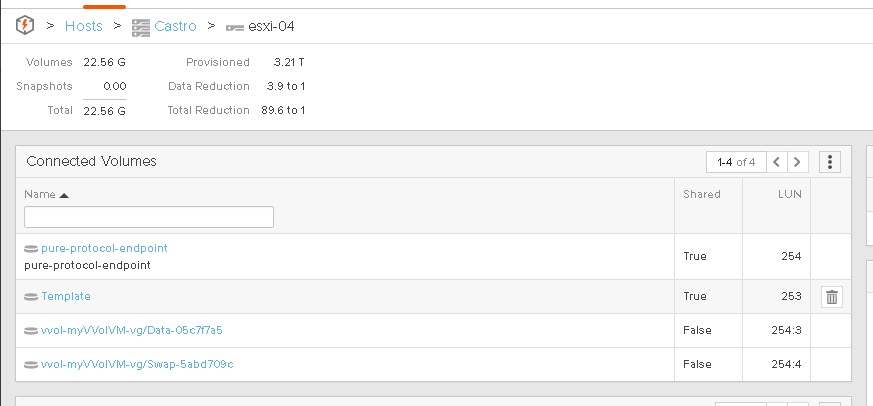

Currently my VM is bound to esxi-04 (and running on it).

Now if I choose esxi-02 and vMotion it, you can see the bindings appear on esxi-02 now:

The config VVol is unbound explicitly from the original host at the end of the vMotion operation:

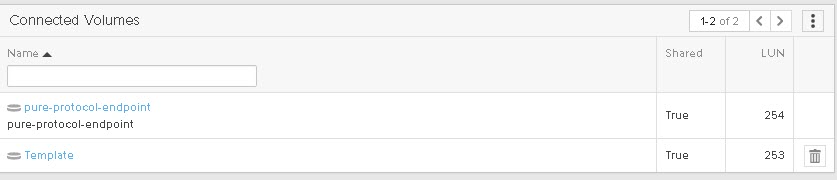

Then shortly thereafter, the data and swap VVols are unbound too from the original host:
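
As a rough sketch of the sequence above: bindings are added on the destination host first, and the original host's bindings are removed afterwards. The function `vmotion` and the VVol labels are hypothetical, and the model tracks a single VM's bindings as simple (host, VVol) pairs.

```python
def vmotion(bindings: set, source: str, destination: str):
    """Return the (during, after) binding sets for moving one VM.

    `bindings` holds (host, vvol) pairs for a single VM.
    """
    moving = {vvol for host, vvol in bindings if host == source}
    # During the move, the VVols are bound to both hosts.
    during = set(bindings) | {(destination, vvol) for vvol in moving}
    # Afterwards, the original host's bindings are gone.
    after = during - {(source, vvol) for vvol in moving}
    return during, after


before = {("esxi-04", "config"), ("esxi-04", "data-1"), ("esxi-04", "swap")}
during, after = vmotion(before, "esxi-04", "esxi-02")
print(sorted(during))
print(sorted(after))
```

The overlap in the `during` set is the important part: the destination needs access before the original host can let go.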

Conclusion

All of this is really behind-the-scenes stuff, which is nice for users. You don’t need to worry about provisioning, de-provisioning, rescans, etc. Simple, dynamic, automatic. Good stuff. I will probably revisit this topic as I dig into more advanced scenarios.

Nice write-up, Cody! I think most people overlook how much binding/unbinding is happening in a VVols environment. It definitely highlights how the VASA provider needs to be implemented to be as highly available as possible.

Or…

Use Tintri for your VMs and skip this altogether