A question recently came up on the Pure Storage Community Forum about VMFS capacity alerts that said, to paraphrase:

“I am constantly getting capacity threshold (75%) alerts on my VMFS volumes but when I look at my FlashArray volume used capacity it is nowhere near that in used space. What can I do to make the VMware number closer to the FlashArray one so I don’t get these alerts?”

This really boils down to two questions: what is the difference between these numbers, and how do I handle it? So, let’s dig into this.

First, let’s look at the basics. Scroll to the bottom if you just want to see my recommendations.

What is VMFS Capacity

At the basic level, VMFS capacity is tracked by looking at how much is logically allocated on the VMFS. This has nothing directly to do with how much the guests have actually written, or even with what has been zeroed. In short, it is how many blocks have been allocated by virtual disks (and also by ISOs, VMX files, etc., although the vast majority of this space is generally VMDKs and swap files).

What is actually reserved also depends on what type of virtual disks you are using. A thick disk (EZT or ZT, i.e. eagerzeroedthick or zeroedthick) reserves all of its space; a thin disk only reserves/allocates what the guest has actually written. So thick virtual disks fill up your VMFS faster, because all of their space is allocated immediately, while with thin disks the filling is more gradual, because space is only allocated as the guest needs it.
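
To make the thick vs. thin difference concrete, here is a minimal sketch of how much a set of virtual disks allocates on VMFS. It is a toy model, not a vSphere API: the `VirtualDisk` class, the disk-type strings and the helper functions are hypothetical names for illustration, and it ignores VMFS block rounding, metadata, swap files, VMX files and ISOs.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumption: disk types are modeled as plain strings; "ezt" (eagerzeroedthick) and
# "zt" (zeroedthick) are thick, "thin" is thin. This is not a vSphere API.
THICK_TYPES = {"ezt", "zt"}


@dataclass
class VirtualDisk:
    provisioned_gb: float    # size the VMDK was created with
    guest_written_gb: float  # how much the guest has actually written
    disk_type: str           # "ezt", "zt" or "thin"


def vmfs_allocated_gb(disk: VirtualDisk) -> float:
    """Space a disk allocates on VMFS (ignores block rounding and VMFS metadata)."""
    if disk.disk_type in THICK_TYPES:
        # Thick disks reserve their full provisioned size immediately.
        return disk.provisioned_gb
    if disk.disk_type == "thin":
        # Thin disks allocate only what the guest has written, up to the provisioned size.
        return min(disk.guest_written_gb, disk.provisioned_gb)
    raise ValueError(f"unknown disk type: {disk.disk_type}")


def datastore_used_gb(disks: list[VirtualDisk]) -> float:
    """What VMFS reports as used: the sum of allocations, regardless of data reduction."""
    return sum(vmfs_allocated_gb(d) for d in disks)


# Two 40 GB disks whose guests have each written 5 GB.
disks = [VirtualDisk(40.0, 5.0, "ezt"), VirtualDisk(40.0, 5.0, "thin")]
print(datastore_used_gb(disks))  # 45.0
```

The thick disk counts its full 40 GB against the VMFS even though its guest has written the same 5 GB as the thin disk.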

What is FlashArray Volume Capacity

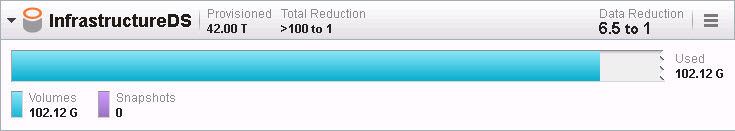

Okay, now that we understand how VMFS tracks space, how does the FlashArray track it for a particular volume? Well there are a couple things that are tracked (unique space, snapshots), but let’s look at unique space. The below is a screenshot of the capacity of the volume hosting the previously mentioned VMFS.

The usage under the “volumes” label is what the FlashArray reports as unique space for that volume, in other words, how much is “used” by the data stored on that volume. In the case above, the volume is uniquely using 102 GB on the FlashArray. So what does uniquely mean?

It means: after compression and deduplication, how much capacity that volume is consuming on the FlashArray that is not shared with any other volume.

Let me explain this with a very simple example. Let’s say we have two volumes on a FlashArray, and a host writes the following four blocks (I mean block as a generic term for “some data”) to volume “vol1”:

1001

1101

1101

1110

That host writes three blocks to volume “vol2”:

1100

0111

1110

So what does each volume now have for unique space? “vol1” has some internal dedupe: it has the block 1101 twice, so that will be reduced to just one instance. “vol2” does not have any internal dedupe, since all three blocks it wrote are different. But both “vol1” and “vol2” have the block 1110, so that will be stored only once. Now how is it accounted for?

The unique volume used capacity of “vol1” will be a total of two blocks: 1001 and 1101. The other volume, “vol2”, will also have a unique volume used capacity of two blocks: 1100 and 0111.

So “vol1” unique space is 2 blocks:

1001

1101

and “vol2” unique space is 2 blocks:

1100

0111

Who then accounts for the 1110 block? Neither volume.

Neither volume will account for the block 1110 because neither volume can claim sole ownership of that data; they both have metadata pointers to that block. This block will therefore be accounted for by the array-level shared space, which covers any data that is shared by more than one volume. So the array-level shared space would be 1 block:

1110

(the two identical 1110s are reduced to one instance).
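
The accounting rule above can be sketched as a few lines of Python. This is a toy model under stated assumptions: blocks are plain strings, there is no compression, and `space_report` is a hypothetical helper, not how the FlashArray engine is implemented. A block counts as unique to a volume only if that volume is its sole referencer; anything referenced by more than one volume lands in shared space.

```python
from __future__ import annotations

from collections import defaultdict


def space_report(volumes: dict[str, list[str]]) -> tuple[dict[str, list[str]], list[str]]:
    """Toy model of per-volume unique space vs. array-level shared space (dedupe only)."""
    owners: dict[str, set[str]] = defaultdict(set)  # block -> volumes that reference it
    for volume, blocks in volumes.items():
        for block in blocks:
            # A block written twice to the same volume still adds just one owner entry,
            # which models internal dedupe.
            owners[block].add(volume)
    unique = {v: sorted(b for b, o in owners.items() if o == {v}) for v in volumes}
    shared = sorted(b for b, o in owners.items() if len(o) > 1)
    return unique, shared


unique, shared = space_report({
    "vol1": ["1001", "1101", "1101", "1110"],
    "vol2": ["1100", "0111", "1110"],
})
print(unique)  # {'vol1': ['1001', '1101'], 'vol2': ['0111', '1100']}
print(shared)  # ['1110']
```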

So what happens if “vol2” now writes 1101? “vol1” currently has 1101 as unique space; no one else had written it. Now that “vol2” has written it too, it is no longer unique to either volume, so it is moved out of the unique space of “vol1” and into shared space. So now:

“vol1” unique space is 1 block:

1001

and “vol2” unique space is 2 blocks:

1100

0111

The array-level shared space is now 2 blocks:

1110

1101

So the unique footprint of “vol1” changed due to something that the volume has nothing to do with–the dataset of a different volume. It just so happened that the second volume wrote some data that happened to dedupe with something the first volume already stored.
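
A small incremental version of the same toy model shows this effect directly: a write that only touches “vol2” changes what “vol1” reports. The `DedupeAccounting` class is a hypothetical illustration that tracks, for each stored block, the set of volumes pointing at it; it is not a Purity interface.

```python
from collections import defaultdict


class DedupeAccounting:
    """Toy incremental model: each stored block remembers which volumes point at it."""

    def __init__(self) -> None:
        self.refs = defaultdict(set)  # block -> set of volumes referencing it

    def write(self, volume: str, block: str) -> None:
        self.refs[block].add(volume)

    def unique_blocks(self, volume: str) -> list:
        return sorted(b for b, vols in self.refs.items() if vols == {volume})

    def shared_blocks(self) -> list:
        return sorted(b for b, vols in self.refs.items() if len(vols) > 1)


acct = DedupeAccounting()
for block in ["1001", "1101", "1101", "1110"]:
    acct.write("vol1", block)
for block in ["1100", "0111", "1110"]:
    acct.write("vol2", block)
print(acct.unique_blocks("vol1"))  # ['1001', '1101']

acct.write("vol2", "1101")          # only vol2 wrote anything...
print(acct.unique_blocks("vol1"))  # ['1001']  ...yet vol1's unique space shrank
print(acct.shared_blocks())        # ['1101', '1110']
```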

Okay so what is the takeaway here? The takeaway should be that the unique used capacity reported by the FlashArray does not necessarily have much to do with how much has been written by the host. This metric is really about two things:

- How well this volume reduces, both against itself and against the dataset currently on the FlashArray

- How much capacity you would get back right away if the volume were deleted (the volume unique space)

This used capacity has just as much to do with what the host wrote to it, as what hosts have written to other volumes. It should not be a metric that you use to decide when to increase the size of a volume or when you should add a new volume.
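
The “what you get back on delete” point can be sketched with the same toy model. Under the assumption that each block simply records which volumes reference it (the `reclaimable_on_delete` and `after_delete` helpers are hypothetical), deleting “vol1” frees only its unique block, and the blocks it used to share become unique to “vol2”, so the other volume’s number grows without any new writes.

```python
from __future__ import annotations


def reclaimable_on_delete(refs: dict[str, set[str]], volume: str) -> list[str]:
    """Blocks freed immediately if `volume` is deleted: those only it references."""
    return sorted(block for block, vols in refs.items() if vols == {volume})


def after_delete(refs: dict[str, set[str]], volume: str) -> dict[str, set[str]]:
    """Drop the volume's references; blocks with no remaining references are freed."""
    remaining = {block: vols - {volume} for block, vols in refs.items()}
    return {block: vols for block, vols in remaining.items() if vols}


# State from the example after "vol2" also wrote 1101.
refs = {"1001": {"vol1"}, "1101": {"vol1", "vol2"}, "1110": {"vol1", "vol2"},
        "1100": {"vol2"}, "0111": {"vol2"}}
print(reclaimable_on_delete(refs, "vol1"))       # ['1001']
survivors = after_delete(refs, "vol1")
print(reclaimable_on_delete(survivors, "vol2"))  # ['0111', '1100', '1101', '1110']
```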

NOTE: This behavior may differ on other AFAs (all-flash arrays), so check with your vendor. Also, our actual dedupe engine is far more sophisticated than this, but this example shows conceptually how the reporting works.

So let’s loop this back to VMFS.

VMFS and FlashArray Volume Capacity Management

Okay, now we understand how VMFS tracks data and how the FlashArray tracks used data for a volume. So how does this change monitoring in VMware? Well, really it doesn’t. Who cares what the volume unique space says? What matters is what the file system (in this case, VMFS) reports as used. The FlashArray just takes that footprint and reduces it as much as possible.

So if there is a difference between what the FlashArray reports for a volume and what VMFS reports, it means one of three things (or a mix of them):

- The data set is reducing well! Yay! It is what it is.

- The data set is not reducing well. Oh well. It is what it is.

- There is dead space and you should run VMFS UNMAP. Let’s do it!

- A mixture of all three. Let’s run VMFS UNMAP and see if we get space back!

Let’s look at a few scenarios. Say we have a 100 GB VMFS on a 100 GB FlashArray volume:

- FlashArray reports the volume uses 30 GB and VMFS reports 90 GB. This means you have a lot of allocated space that reduces very well: that 90 GB has been reduced either internally within the volume or against data from other volumes. If you need to allocate more VMs, or you are getting VMFS capacity alerts and want them to go away, your only options are to grow the VMFS, create a new VMFS, or delete or move some VMs off.

- FlashArray reports the volume uses 90 GB and VMFS reports 30 GB. This most likely means you have dead space, and you need to run VMFS UNMAP if you want the FlashArray reporting to correct itself. But you can still grow VMs or allocate new VMs, because VMFS will simply overwrite the dead space. UNMAP is really about housekeeping and making the array reporting accurate, rather than actually preventing out-of-space issues, as hosts will overwrite the dead space eventually. You can still allocate the roughly 70 GB that VMFS reports as free, regardless of how much the array currently reports. What matters is what VMFS says.

- FlashArray reports the volume uses 30 GB and VMFS reports 30 GB too. This suggests the VMFS isn’t very full and the data isn’t reducing at all. However, since there is a lot of free space on the VMFS, there is a high chance of lots of dead space, so it is also possible that the data is actually reducing well and a lot of poorly reducing dead space is making it look as if it isn’t. UNMAP is worth trying here; it may reduce the FlashArray volume footprint.

- FlashArray reports the volume uses 90 GB and VMFS reports 90 GB too. This means the VMFS is rather full and the data is likely not reducing well. Since there is very little free space on the VMFS, there is a smaller chance of dead space on the VMFS itself, though there may still be poorly reducing dead space inside the guests.
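
The four scenarios above can be condensed into a rough decision helper. This is only a sketch under explicit assumptions: `interpret_capacity` is a hypothetical name, “about the same” is arbitrarily taken to mean the two numbers differ by at most 10% of the VMFS size, and “rather full” is arbitrarily taken to mean 75% or more. It encodes the reading of the numbers, not a rule from VMware or Pure Storage.

```python
def interpret_capacity(vmfs_used_gb: float, array_unique_gb: float, vmfs_size_gb: float,
                       tolerance: float = 0.10, full_fraction: float = 0.75) -> str:
    """Rough reading of the four scenarios. Both thresholds are arbitrary assumptions."""
    vmfs_fill = vmfs_used_gb / vmfs_size_gb
    # Treat the two numbers as "about the same" if they differ by at most 10% of the VMFS size.
    similar = abs(vmfs_used_gb - array_unique_gb) <= tolerance * vmfs_size_gb
    if similar and vmfs_fill >= full_fraction:
        return "full, not reducing well; little VMFS dead space (check in-guest)"
    if similar:
        return "not reducing, or dead space masking good reduction: try UNMAP"
    if array_unique_gb < vmfs_used_gb:
        return "reducing well: to fix VMFS alerts, grow the VMFS, add a VMFS, or move VMs"
    return "likely dead space: run UNMAP for accurate reporting; VMFS free space is usable"


# The four scenarios on a 100 GB VMFS: (VMFS used, FlashArray unique).
for used, array in [(90, 30), (30, 90), (30, 30), (90, 90)]:
    print(used, array, interpret_capacity(used, array, 100))
```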

Conclusion

Okay, so what? In the end this is all very simple. If your VMFS is full, you should think about increasing the volume size to provide more capacity for VMFS to allocate. There is no need to size volumes based on data reduction expectations, or to resize them based on data reduction reality. Size volumes based on how much you expect to allocate. If you are using thick virtual disks, much more of that allocation happens up front.
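
As a sketch of “size based on allocation, not reduction”, the helper below picks a volume size from the expected VMFS allocation and an alert threshold, and deliberately takes no data reduction ratio as input. The function name and the 75% default (borrowed from the alert in the forum question) are assumptions for illustration. For thick disks the expected allocation is the provisioned size; for thin disks it is what the guests write, which will grow over time.

```python
import math


def volume_size_gb(expected_allocation_gb: float, alert_threshold: float = 0.75) -> int:
    """Smallest whole-GB volume that keeps the expected VMFS allocation at or below the
    alert threshold. Data reduction is intentionally not an input: VMFS fills by allocation."""
    if not 0 < alert_threshold <= 1:
        raise ValueError("alert_threshold must be in (0, 1]")
    return math.ceil(expected_allocation_gb / alert_threshold)


# Ten thick 100 GB VMDKs allocate 1000 GB up front.
print(volume_size_gb(1000.0))  # 1334
# The same VMDKs as thin, with guests having written 300 GB so far.
print(volume_size_gb(300.0))   # 400
```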

So here are my recommendations. Track only the following things:

- How much free space does your VMFS have.

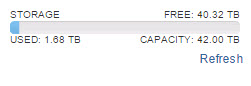

- How much free space does your FlashArray has.

So what should you do?

- Use thin virtual disks. This reduces the need to increase your datastore size all the time (unless your VMs write new data like crazy). Instead, your virtual disks consume only what the guest has written and no more, which reduces the up-front VMFS allocation penalty. Plus, you get all of the additional benefits of thin, like in-guest UNMAP support. The downside is that you need to pay a bit more attention to VMFS filling up, so that thin disks can continue to grow. But there is a simple solution to this:

- Use large volumes; there is no capacity penalty to provisioning large volumes on the FlashArray. And since there is no per-device queue limit on the FlashArray, there is no performance implication there either.

- Use Storage DRS. Create a datastore cluster for your datastores on the same array. Turn on capacity-based moves and set a space utilization threshold (%) so that SDRS moves VMs between the datastores if utilization gets too high on one of them. If all of them are too full and SDRS cannot do anything more to help, it is time to add storage in some way.

- Use vCenter capacity alerts for VMFS. If your SDRS space threshold is 75%, create a VMFS alert that triggers at, for instance, 90%, and either have it automatically kick off a VMFS volume expansion (I blogged about how to do that automatically here) or have it send you an email so you can do it yourself as needed. Alternatively, provision a new volume and add it to the datastore cluster so SDRS can rebalance.

- Once the FlashArray itself becomes rather full (75% or more), first run UNMAP (if you don’t already do it regularly).

- If the FlashArray is still relatively full after UNMAP, it is time to buy more SSDs for the array or look at balancing across other FlashArrays you might have.

- Use VVols. With VVols, VMFS goes away and virtual disks are volumes and therefore the FlashArray controls the capacity reporting and management and there is no more disconnect. This is really the best option as VVols have a lot of benefits beyond this.
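
The monitoring recommendations above can be sketched as a small policy function. Everything here is a hypothetical illustration: `recommended_actions` is not a vCenter or FlashArray API, and the 75% SDRS threshold, 90% VMFS alert and 75% array threshold are just the example numbers from the list. Note what the inputs are: VMFS fullness per datastore and array-level fullness, and nothing about per-volume unique space.

```python
from __future__ import annotations


def recommended_actions(vmfs_used_pct: dict[str, float], array_used_pct: float,
                        sdrs_threshold: float = 75.0, vmfs_alert: float = 90.0,
                        array_threshold: float = 75.0) -> list[str]:
    """Hypothetical policy driven only by VMFS fullness and array-level fullness."""
    actions: list[str] = []
    over = [ds for ds, pct in vmfs_used_pct.items() if pct >= sdrs_threshold]
    if over and len(over) < len(vmfs_used_pct):
        # Some datastores still have room, so SDRS can rebalance.
        actions.append("let SDRS move VMs off: " + ", ".join(sorted(over)))
    elif over:
        # Every datastore is over the threshold: SDRS cannot help anymore.
        actions.append("datastore cluster is full: grow a VMFS or add a volume to the cluster")
    for ds, pct in sorted(vmfs_used_pct.items()):
        if pct >= vmfs_alert:
            actions.append(f"alert: expand {ds} or add a volume to the cluster")
    if array_used_pct >= array_threshold:
        actions.append("array: run UNMAP; if still full, add SSDs or rebalance across arrays")
    return actions


print(recommended_actions({"ds1": 92.0, "ds2": 40.0}, array_used_pct=60.0))
```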

The final point is that what matters is how full VMFS thinks it is. Even if the FlashArray volume is empty, if VMFS is fully allocated, the volume needs to be grown or you need another volume. This is axiomatic. Data reduction on the array does not change how VMFS sizing is done; it is the same as before. If you are provisioning thick, you need larger volumes. If you are using thin, you can make them smaller. But why not just make them larger now?