This week a customer asked me about some slowness they were seeing in the vSphere “Add Storage” wizard. This problem has come up quite a few times over the years for a variety of different reasons. VMware has fixed most of them, and luckily this latest cause was known and has a relatively simple solution: an option called VMFS.UnresolvedVolumeLiveCheck.

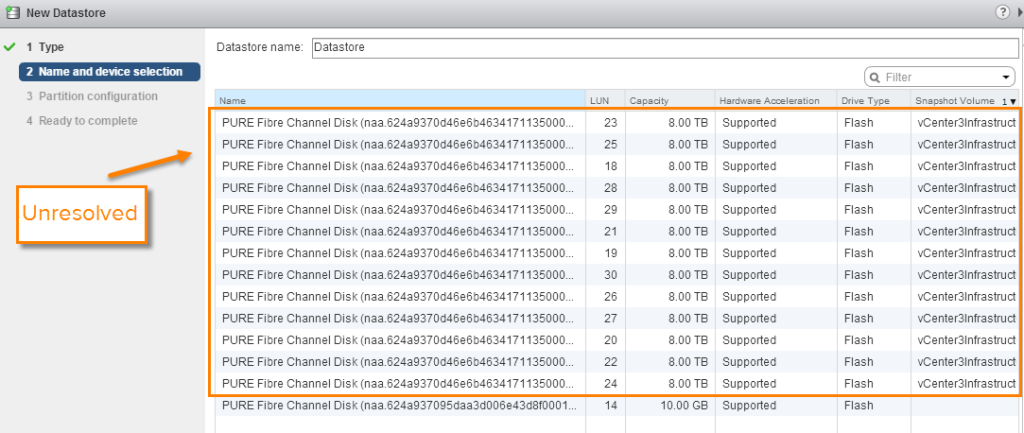

Essentially, the problem the customer was facing was that they had a bunch of unresolved VMFS volumes presented to their ESXi hosts (it was a DR site), and when they launched the Add Storage wizard it took a long time to populate the available devices. The slowness went away when the unresolved VMFS volumes were not presented.

So quickly, what is an unresolved VMFS? This is typically a VMFS volume that has been copied via array-based local snapshotting or remote replication. The VMFS is copied to a new device and presented to a host. It is “unresolved” because the serial number of the new device no longer matches the unique identifier of the VMFS. The UID of the VMFS is associated with the original volume's serial, so there is a mismatch. ESXi always wants these to match, and when it finds a mismatch it does not mount the volume automatically. You can either “force mount” it, keeping the invalid signature (not usually a good idea), or resignature it to give it a new UID based on the new volume’s serial (best practice).
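To make the mismatch concrete, here is a minimal Python sketch of the decision described above. It is a toy model and not how ESXi implements it: the `Device` and `VmfsHeader` types, their fields, and the UID format are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmfsHeader:
    uid: str            # VMFS unique identifier (hypothetical format)
    origin_serial: str  # serial of the device the UID was derived from


@dataclass(frozen=True)
class Device:
    serial: str
    vmfs: VmfsHeader


def is_unresolved(device: Device) -> bool:
    """A volume is 'unresolved' when its VMFS points at a different device serial."""
    return device.vmfs.origin_serial != device.serial


def resignature(device: Device) -> Device:
    """Give the VMFS a new UID tied to the device it now lives on."""
    new_header = VmfsHeader(uid=f"uid-for-{device.serial}", origin_serial=device.serial)
    return Device(serial=device.serial, vmfs=new_header)


# The original volume, and an array snapshot of it presented as a new device.
original = Device("SERIAL-A", VmfsHeader("uid-for-SERIAL-A", "SERIAL-A"))
snapshot_copy = Device("SERIAL-B", original.vmfs)  # same VMFS bits, new serial

print(is_unresolved(original))                    # False
print(is_unresolved(snapshot_copy))               # True
print(is_unresolved(resignature(snapshot_copy)))  # False
```

A force mount, in this model, would simply be using `snapshot_copy` as it is, mismatch and all.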

Anyways, I asked the usual questions. The old problem I used to see in my previous job was the presence of unresolved but write-disabled devices. The Add Storage wizard (and storage rescans) would recognize that these devices held VMFS volumes, but would not recognize that they were write-disabled. So they would attempt some simple metadata updates on the VMFS, which failed because the device was write-disabled, and then retry hundreds of times per volume. This made these operations take a ridiculous amount of time (30 seconds or so per write-disabled unresolved volume). This was fixed, though, in later versions of ESXi 5.0 and 5.1.

I asked if they had devices like these (write-disabled and unresolved) and what version of ESXi and the answer was no and 5.5 respectively. So not that. Asked a few other fruitless questions and decided to work on something else for a bit to hopefully inspire an answer.

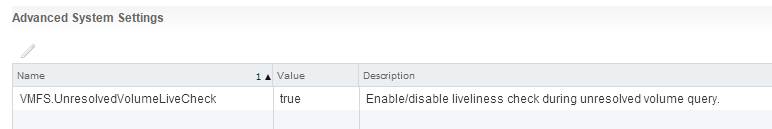

So I was working on something unrelated and happened to notice the VMFS.UnresolvedVolumeLiveCheck option. I had never really heard of it but it looked like it might be perfect.

Not a lot of information out there on it. But a quick Google search provided this blog post. Eureka! This was exactly what my customer was facing.

His post explains this quite well, but in short, ESXi performs a check on unresolved VMFS volumes prior to populating the device table to make sure these volumes are not presented to (and force-mounted on) another host and in use there. If they are, and you then resignature the volume, it would become invalid on the other host and the VMs hosted on it would go down. This check is enabled by default. Disabling the option skips the check, effectively speeding up the “Add Storage” wizard. Disabling this check solved my customer’s problem.
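Here is a rough Python sketch of that trade-off. It is only a conceptual model: I don't know how ESXi actually performs the check internally, so the `Host` type, the `list_unresolved` function, and the idea of flagging (rather than hiding) in-use volumes are all assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    # UIDs of VMFS volumes this host has force-mounted (hypothetical model)
    force_mounted: set = field(default_factory=set)


def list_unresolved(unresolved_uids, other_hosts, live_check=True):
    """Return (rows, checks_done); each row is (uid, hosts_using_it).

    With live_check on, every unresolved volume costs one extra check.
    With it off, nothing is checked and nothing is flagged.
    """
    rows = []
    checks_done = 0
    for uid in unresolved_uids:
        in_use_on = []
        if live_check:
            checks_done += 1
            in_use_on = [h.name for h in other_hosts if uid in h.force_mounted]
        rows.append((uid, in_use_on))
    return rows, checks_done


other_hosts = [Host("esxi-dr-02", {"vmfs-b"})]
unresolved = ["vmfs-a", "vmfs-b", "vmfs-c"]

print(list_unresolved(unresolved, other_hosts, live_check=True))
print(list_unresolved(unresolved, other_hosts, live_check=False))
```

The point of the model is that the cost scales with the number of unresolved volumes, which matches what the customer saw at a DR site full of them, and that turning the check off also removes the warning for `vmfs-b`.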

Of course, disabling this option should be evaluated. This is a SAFETY check, so unless this is really causing a problem I recommend not touching it. If you never force mount and you know it, you will be fine though without this check. Which is really what should be happening anyways.

<on soapbox> Unless you have a very good reason–do not force mount. </off soapbox>

Some notes…

So from what I could tell, this option was introduced in the initial release of ESXi 5.1. I didn’t see it on my ESXi 5.0 U3 hosts or anything earlier.

Furthermore, I ran into some weirdness figuring out the default value. On all of my hosts except the ESXi 6 ones, this option was set to “true” (meaning they performed the check). On the ESXi 6 hosts it was set to false. I did some more research and found some cryptic references to VMware KB article 2074047. The KB itself, though, seems to have been hidden.

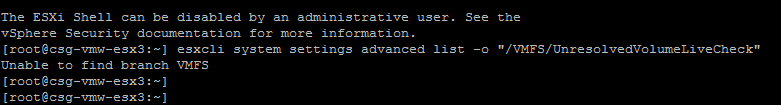

Usually a good way to find a default is to use the CLI as it will report the current value and the default. But to add to the weirdness, I can’t quite seem to find this option in the CLI which I think is strange. I ran the usual esxcli command “esxcli system settings advanced list” and didn’t see it listed.

Can’t say I’ve ever seen an option in the GUI but not in the CLI. Maybe it is just somewhere else.

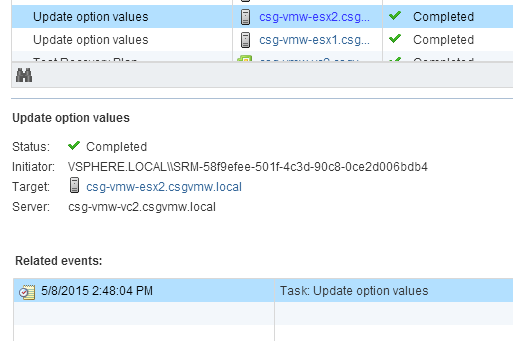

The aforementioned KB was called “vCenter Site Recovery Manager 5.5 VMFS.UnresolvedVolumeLiveCheck setting” and in fact all of my ESXi 6 hosts were indeed in my VMware vCenter Site Recovery Manager environment. So my guess was that maybe SRM was changing this value.

I found an ESXi 6 host that had not been touched by SRM and sure enough its VMFS.UnresolvedVolumeLiveCheck was enabled like my 5.x hosts. So it definitely looks like SRM alters this setting. I guess this setting affects API-based resignaturing operations too, not just the GUI wizard. Don’t see this behavior documented anywhere though. I am presuming it was in that now-hidden KB, so who knows why it is hidden now.

To verify, I re-enabled this setting on my ESXi 6 hosts and ran an SRM test failover to them. Indeed, the setting was changed back to false.
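If you want to repeat that before-and-after comparison without clicking through every host, something like the following Python sketch could help. It assumes you already hold each host's advanced-option manager from the vSphere API (with pyVmomi that would be `host.configManager.advancedOption`, whose `QueryOptions(name)` returns objects with `key` and `value`). Connecting to vCenter is left out, and the helper names `read_live_check` and `changed_hosts` are mine. I have not confirmed how this option's value is typed through the API, so the sketch just compares raw values, and the demo at the bottom uses stand-in objects rather than real hosts.

```python
OPTION = "VMFS.UnresolvedVolumeLiveCheck"


def read_live_check(option_managers):
    """Map host name -> current raw value of the option (None if not returned).

    option_managers: dict of host name -> object exposing QueryOptions(name),
    e.g. a pyVmomi host's configManager.advancedOption (assumption).
    """
    values = {}
    for host_name, manager in option_managers.items():
        results = manager.QueryOptions(OPTION)
        values[host_name] = results[0].value if results else None
    return values


def changed_hosts(before, after):
    """Return {host: (old, new)} for hosts whose value differs between snapshots."""
    return {
        host: (before[host], after[host])
        for host in before
        if host in after and before[host] != after[host]
    }


# --- Stand-ins so the sketch runs without a vCenter (not real API objects) ---
class FakeOptionValue:
    def __init__(self, key, value):
        self.key, self.value = key, value


class FakeOptionManager:
    def __init__(self, value):
        self.current = value

    def QueryOptions(self, name):
        return [FakeOptionValue(name, self.current)]


managers = {"esxi6-a": FakeOptionManager(True), "esxi6-b": FakeOptionManager(True)}
before = read_live_check(managers)
managers["esxi6-a"].current = False  # pretend an SRM test failover ran here
after = read_live_check(managers)
print(changed_hosts(before, after))  # {'esxi6-a': (True, False)}
```

Taking one snapshot before the SRM test and one after, then diffing, would show which hosts had the setting flipped.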

So, a long post to say that this option exists and helps, but I would like to know more about how it interacts with SRM. Officially.